What OpenAI’s DevDay Means for the LMUX

When a customer wants a new capability, the usual first step is to build a feature into your app. But what if that's no longer the right move? More and more work is happening through agents and in chat apps, so we decided to build there. At Theory, we're creating fully functional app experiences delivered through language models; we call this a Language Model User Experience, or LMUX.

Building an LMUX presents challenges and opportunities we’ve been thinking about:

- How should the language model interface with our business logic? Hint: MCP (Model Context Protocol) is the answer for now.

- If users are interacting with our app exclusively via language models, is there anything novel we can do with the information passing through that gateway?

At DevDay 2025, OpenAI put its stamp of approval on the LMUX by launching the Apps SDK and AgentKit.

The Apps SDK standardizes how we connect to business logic (via MCP servers) and how that information is displayed and interacted with (via iframes). As a result, LMUXs no longer demand the heavy engineering they once did.
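To make "connecting to business logic via MCP" concrete, here is a minimal sketch of the server side of that exchange. MCP is built on JSON-RPC 2.0, and a client discovers tools with a `tools/list` request and invokes one with `tools/call`. The sketch below hand-rolls just those two methods with the standard library so the message shapes are visible; it is not the official MCP SDK, it omits the transport and initialization handshake, and it does not cover the Apps SDK's iframe UI layer. The `get_portfolio_company` tool and its data are hypothetical stand-ins for your own business logic.

```python
import json
from typing import Any, Callable

# Hypothetical business logic: in a real app this would call your own services or database.
def get_portfolio_company(name: str) -> dict[str, Any]:
    companies = {"acme": {"name": "Acme", "stage": "Seed"}}
    return companies.get(name.lower(), {"error": f"unknown company: {name}"})

# Tool registry: tool name -> (description, JSON Schema for arguments, handler).
TOOLS: dict[str, tuple[str, dict[str, Any], Callable[..., dict[str, Any]]]] = {
    "get_portfolio_company": (
        "Look up a portfolio company by name.",
        {
            "type": "object",
            "properties": {"name": {"type": "string"}},
            "required": ["name"],
        },
        get_portfolio_company,
    ),
}

def _error(req_id: Any, code: int, message: str) -> str:
    # JSON-RPC 2.0 error envelope.
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}})

def handle(message: str) -> str:
    """Route one JSON-RPC request string to the matching tool and return the response string."""
    req = json.loads(message)
    req_id, method, params = req.get("id"), req.get("method"), req.get("params", {})

    if method == "tools/list":
        # Advertise each tool's name, description, and argument schema to the model's client.
        result: dict[str, Any] = {
            "tools": [
                {"name": name, "description": desc, "inputSchema": schema}
                for name, (desc, schema, _) in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        name = params.get("name")
        if name not in TOOLS:
            return _error(req_id, -32602, f"unknown tool: {name}")  # invalid params
        _, _, handler = TOOLS[name]
        data = handler(**params.get("arguments", {}))
        # Tool output goes back as content blocks the language model can read.
        result = {
            "content": [{"type": "text", "text": json.dumps(data)}],
            "isError": "error" in data,
        }
    else:
        return _error(req_id, -32601, f"method not found: {method}")

    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})

if __name__ == "__main__":
    call = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": "get_portfolio_company", "arguments": {"name": "Acme"}},
    }
    print(handle(json.dumps(call)))
```

The design point is the separation: the language model only ever sees tool names, schemas, and returned content, while the business logic stays behind `handle`. In practice you would reach for an official MCP SDK rather than parsing JSON-RPC yourself.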

Previously, building UX in a chat interface meant first creating and deploying your own chat client before adding visuals, state management, or context handling.

With the Apps SDK, you still have to solve those later challenges, but you no longer have to build and deploy your own chat client.

This feels like an early move toward the physical device OpenAI is reportedly developing. If OpenAI can get developers building AI-native products with the Apps SDK, and users using those apps, it will gain priceless insight into how people want to engage with the device it's building.

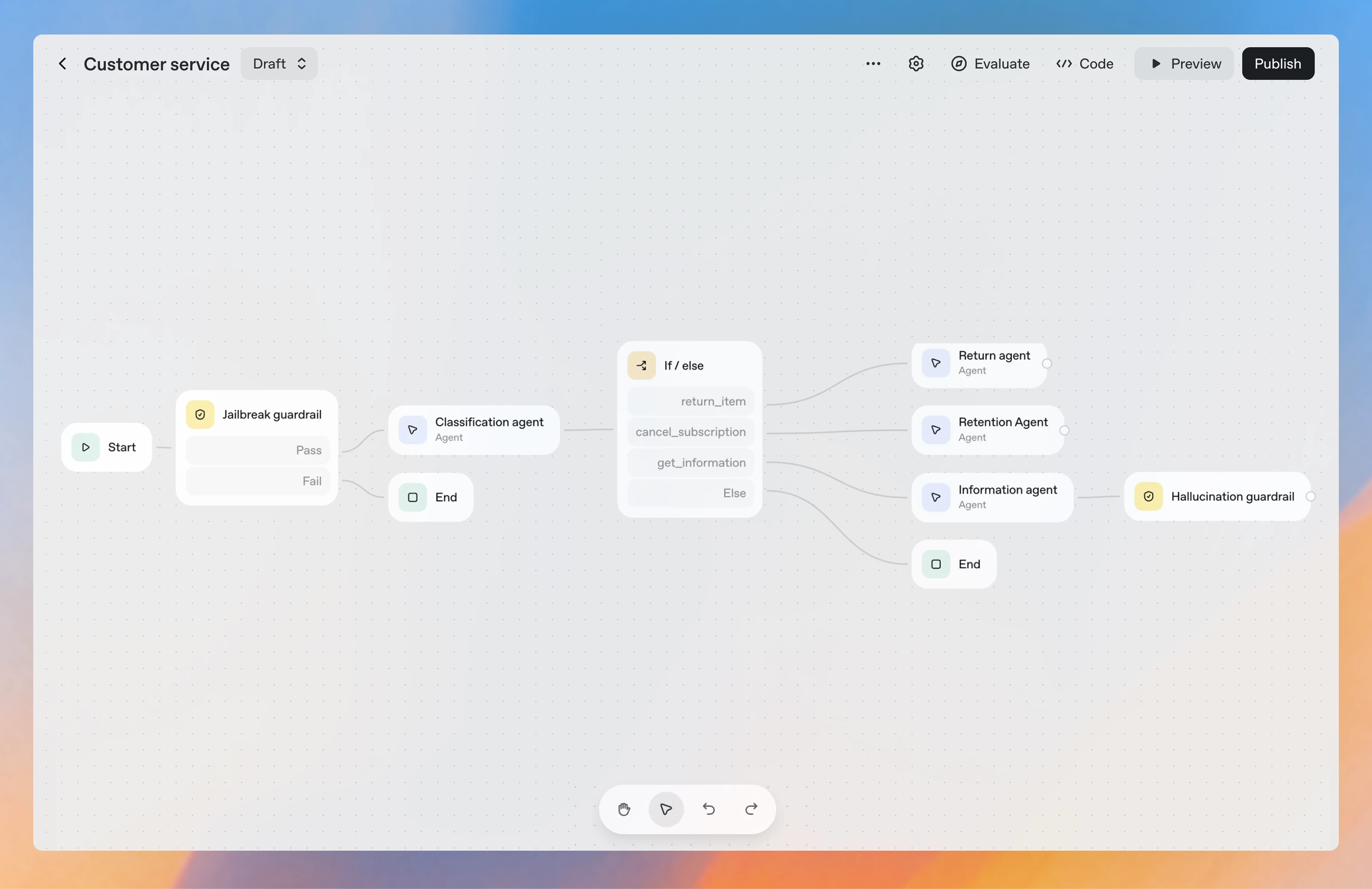

AgentKit is another critical piece of the LMUX architecture. The LMUX is largely synchronous, but users also need to kick off asynchronous tasks and delegate work to agents. The MCP spec is working on asynchronous support, but even with it you would still need to manage your own infrastructure, evals, and observability. AgentKit promises to handle all of that for you, along with a no-code workflow builder, guardrails for validating workflows, and built-in evals. That should make it a simple way to delegate work to agents.

At Theory Ventures, we’re continuing to build LMUX experiences, and we’re excited to test OpenAI’s latest products and share what we learn. Are you building language-model-native products? If so, I’d love to hear about the cool things you’re building and the best practices you’ve picked up along the way at adam@theoryvc.com!