LLM-market fit

I think about LLMs as creating a near-infinite supply of near-free interns. While they don’t have years of domain-specific expertise, they can be phenomenal at getting tasks done. Ask an LLM to summarize a memo or draft an email, and you could easily confuse its responses with a human’s.

How will businesses evolve when this is the case?

It’s clear that many workflows will change dramatically. But LLMs aren’t well-suited for every application. A sales team can gain real superpowers using LLMs to automate outreach. But a loan officer would find it unwise (not to mention illegal) to use an LLM to adjudicate home loans.

In our last blog post, we explored how data moats will change with LLMs. What other criteria help identify the best business use cases for an LLM? For a founder or product leader, deciding which problem to tackle often comes down to intuition and subject-matter expertise. But we find that probing the six questions below in depth can help test and pitch an LLM application:

- Is this a workflow where automation matters?

- Can LLMs do the job?

- Is the workflow tolerant of LLM non-determinism?

- Will it be served by a general LLM platform?

- Will it be served by an incumbent?

- Can the product build defensibility over time?

Note: There are many other questions that are important to answer for a B2B software business – market size, willingness to pay, founder experience, etc. This post focuses on questions specific to LLM systems. But the other ones are important too!

1. A workflow where automation matters

LLMs can make many jobs easier. If email automation saves me 15 minutes a day, I’d be happy. But solving a minor inconvenience does not make a big business.

What workflows have a burning need for LLM-based automation? Here are four key archetypes we’ve seen:

- Critical tasks are always in a backlog: Some teams are chronically under-resourced and see important items stuck in a backlog that’s too big to ever get through. LLM-based automation allows them to increase team-level output. Example: SecOps teams triaging and investigating security alerts

- Teams spend too much time on low-value, low-complexity work: Many teams see their skilled employees spending too much of their time on low-value tasks. Automating these frees them up to do higher-value, more complex work. Example: Engineering teams fixing simple bugs

- Tasks that LLMs can do better: With near-infinite capacity, LLMs can do more methodical and comprehensive research than humans have time to do, and can follow universal best practices that humans may not. Examples: Sales prospecting, legal discovery

- Workflows that benefit from immediate responsiveness: LLMs work 24/7 and can respond in seconds. For simple tasks where fast responses are key, they are invaluable. Example: Responding to customer or sales inquiries

In the longer term, LLMs will enable totally new workflows that aren’t possible today. A sales automation platform might respond immediately to inbound requests with a customized, interactive pitch. A security automation platform might detect a vulnerability and immediately patch it or update configurations.

2. LLMs can do it

After identifying a workflow, the first question to test is simple – can an LLM do the job?

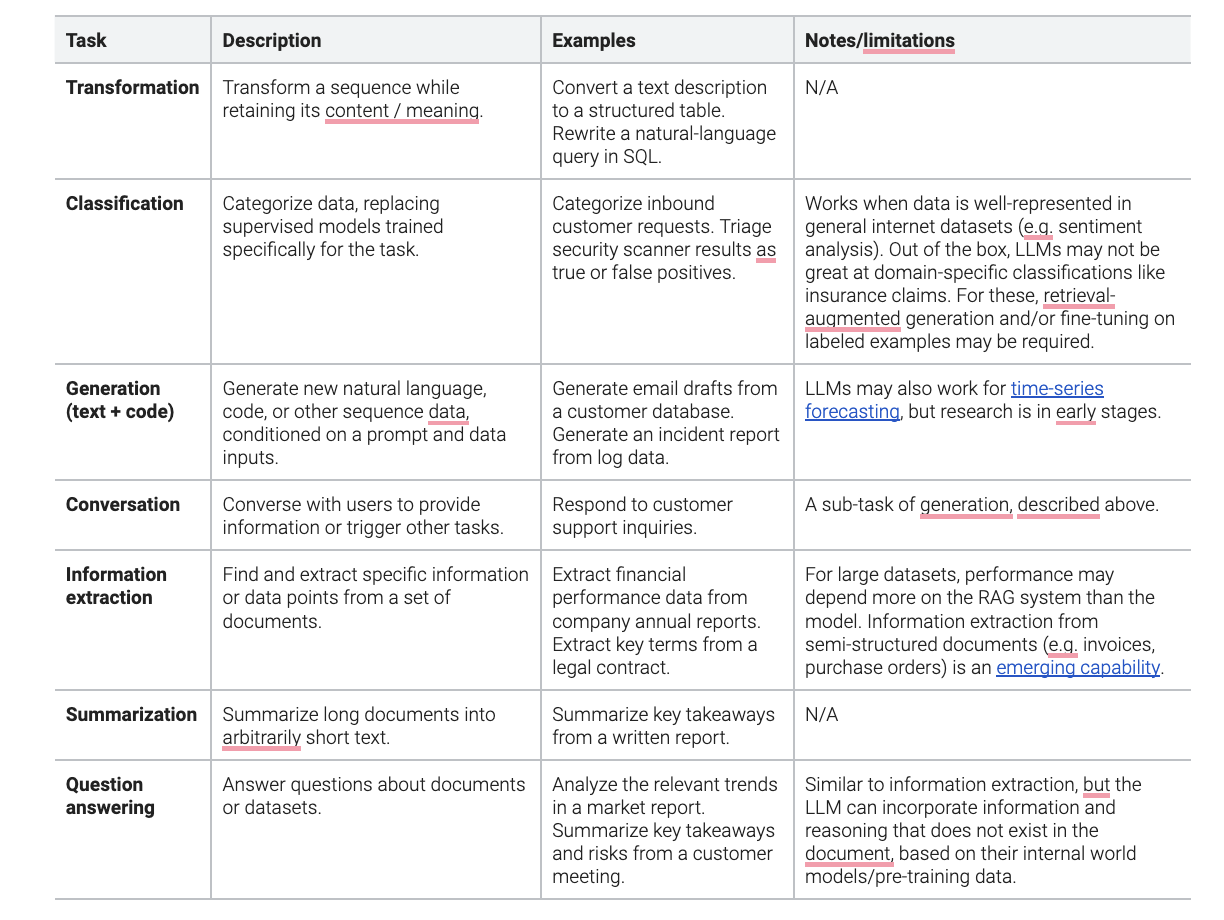

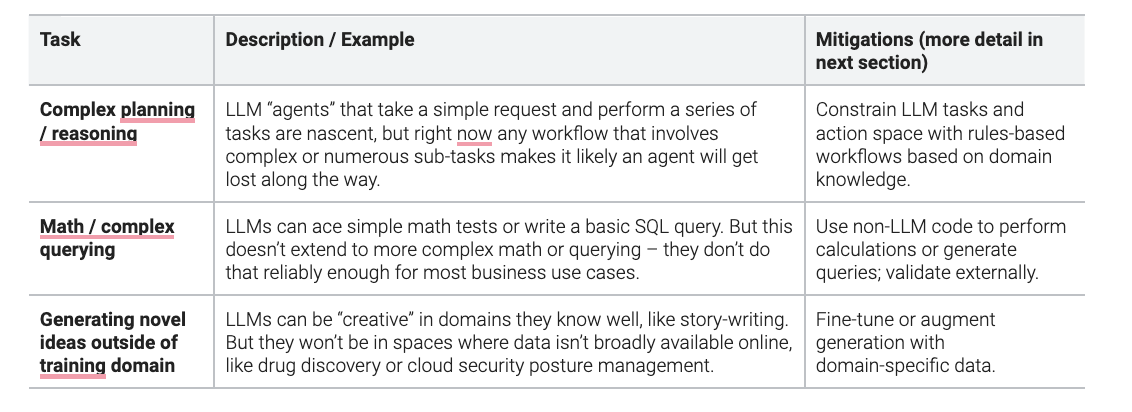

First, we look at each of the specific tasks in a workflow. Is it composed of tasks LLMs do well? Or does it require steps that we know LLMs can’t do reliably yet?

Tasks LLMs can do well are:

Today, LLMs are generally not great at:

3. A workflow that’s fault-tolerant

LLM behaviors are unpredictable. Because LLM output tokens are sampled probabilistically, the same input can generate a different response each time. And if the input data varies slightly – query to query or over time – a model may fail at a task it once performed well.
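To see where that variability comes from, here is a toy sketch of next-token sampling. The three-word vocabulary and its scores are made up for illustration; a real model runs this step over a vocabulary of many thousands of tokens, once for every token it generates, so a different pick early on can lead to a very different response. Sampling at a temperature of 1.0 can return a different word on each call, while greedy decoding (temperature 0 in this sketch) always returns the top-scoring word.

```python
import math
import random

def softmax(logits, temperature):
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def next_token(vocab, logits, temperature, rng):
    """Pick the next token. temperature == 0 means greedy: always the top-scoring token."""
    if temperature == 0:
        return vocab[max(range(len(logits)), key=lambda i: logits[i])]
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

# Hypothetical scores a model might assign to three candidate next words.
vocab = ["approve", "review", "escalate"]
logits = [2.0, 1.5, 0.5]

rng = random.Random()
sampled = [next_token(vocab, logits, 1.0, rng) for _ in range(10)]
greedy = [next_token(vocab, logits, 0, rng) for _ in range(10)]
print(sampled)  # varies from run to run
print(greedy)   # identical on every run
```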

While LLMs can do many business tasks reliably, they’re never going to work 100% of the time. For any LLM application, it’s critical to understand how a workflow will respond to a failure.

Consider these two potential LLM applications in marketing:

- A team uses an LLM to help it brainstorm new messaging for a campaign. A system that gives 9 great recommendations out of every 10 is almost as valuable as one that gives 10 great recommendations. Each good example is useful, and it’s easy to toss out the bad one.

- Now that team wants to use an LLM to respond to tweets referring to the company with a custom reply. If 9 out of 10 responses are great but the 10th is wrong or harmful, the system is almost worthless. The risk is too high, and double-checking each message manually would negate the benefit of automation.
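A quick back-of-the-envelope calculation shows why the second case breaks down. Assume, for simplicity, that each output is independently acceptable 90% of the time. When bad outputs can simply be discarded, value scales with the expected number of good ones (0.9 × n). When every output ships, what matters is the chance that none of them is bad, 0.9^n, which is roughly 35% for 10 replies and about 0.5% for 50.

```python
def p_all_good(success_rate: float, n: int) -> float:
    """Probability that all n independent outputs are acceptable (every output ships)."""
    return success_rate ** n

def expected_good(success_rate: float, n: int) -> float:
    """Expected number of acceptable outputs among n (bad ones are simply discarded)."""
    return success_rate * n

for n in (1, 10, 50):
    print(f"n={n}: P(no bad reply)={p_all_good(0.9, n):.3f}, "
          f"expected good ideas={expected_good(0.9, n):.1f}")
```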

What workflow characteristics make it more likely an LLM can do them reliably?

1. For complex applications, the best LLM systems will have expert-defined workflows and orchestration. These systems use LLMs for what they’re best at, with logic, prompting, information retrieval, and external models handling the rest.

An LLM tasked to simply “research this company” will produce something, but of variable form and quantity.

Instead, a well-designed LLM workflow will have an orchestrating system with dozens or hundreds of points of guidance. “Extract data from this financial statement.” “If margins decreased by more than 5%, search call transcripts for mentions of costs.” “Look at competitors’ annual reports for mention of the target company.” And so on.

2. Workflows are more reliable when an LLM’s output can be validated programmatically. Generated code can be checked for syntactic correctness, or compiled and run to catch errors. Workflows can build in heuristics-based guidelines, e.g., “the extracted value should be a dollar amount between $1,000 and $100,000” or “the report should be between 150 and 200 words.” Finally, another LLM with specific prompting can judge whether the model output passes muster.

External validation makes sure that LLM outputs won’t break other parts of the system. The same feedback can also be given to the LLM to self-correct its output.

3. Many business applications will benefit from fine-tuning or custom model development. This can provide domain-specific knowledge and vocabulary and ensure outputs are properly formatted. Reinforcement learning from human feedback, another type of fine-tuning, can help a model learn that a workflow calls for detailed responses rather than vague ones.
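As a rough sketch of how orchestration and programmatic validation fit together, the Python below breaks “research this company” into narrow steps, checks each extracted value in code, and feeds any validation error back to the model so it can correct itself. Everything here is hypothetical: the model is passed in as a plain prompt-to-text function, the plausible-range check is an illustrative heuristic, the “more than 5%” rule is read as a drop of more than 5 percentage points, and a keyword filter stands in for a real transcript search.

```python
from __future__ import annotations

import re
from typing import Callable, Optional

# Hypothetical interface: any function that takes a prompt and returns the model's text.
LLM = Callable[[str], str]

def validate_margin(text: str) -> tuple[Optional[float], str]:
    """Heuristic check: the answer must be a single percentage between -100% and 100%."""
    match = re.fullmatch(r"\s*(-?\d+(?:\.\d+)?)\s*%\s*", text)
    if not match:
        return None, "Answer with a single percentage, e.g. '12.5%'."
    value = float(match.group(1))
    if not -100 <= value <= 100:
        return None, f"{value}% is outside the plausible range of -100% to 100%."
    return value, ""

def extract_with_retries(llm: LLM, prompt: str, max_attempts: int = 3) -> Optional[float]:
    """Ask the model, validate in code, and feed validation errors back for self-correction."""
    feedback = ""
    for _ in range(max_attempts):
        suffix = f"\nYour previous answer was rejected: {feedback}" if feedback else ""
        value, feedback = validate_margin(llm(prompt + suffix))
        if value is not None:
            return value
    return None  # give up; a real system might route this to a human

def research_company(llm: LLM, statement: str, transcripts: list[str]) -> dict:
    """Expert-defined orchestration: each step is a narrow, checkable instruction."""
    report: dict = {}
    this_year = extract_with_retries(llm, f"Extract this year's operating margin:\n{statement}")
    last_year = extract_with_retries(llm, f"Extract last year's operating margin:\n{statement}")
    report["margins"] = (last_year, this_year)
    if this_year is None or last_year is None:
        report["status"] = "needs human review"
        return report
    # Workflow rule: look into costs only if the margin fell by more than 5 points.
    if last_year - this_year > 5:
        report["cost_mentions"] = [t for t in transcripts if "cost" in t.lower()]
    report["status"] = "ok"
    return report

# Demo with a scripted stand-in model whose first answer is malformed.
scripted = iter(["Margins were strong this year", "8%", "15%"])

def fake_llm(prompt: str) -> str:
    return next(scripted)

report = research_company(
    fake_llm,
    "(financial statement text)",
    ["Q3 call: input costs rose sharply", "Q3 call: new product launch"],
)
print(report)
```

In the demo, the validator rejects the first answer, the retry succeeds, and because the margin fell from 15% to 8%, the workflow goes on to collect the transcript that mentions costs. Keeping each step this narrow is what makes it possible to check the output in code at all.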

What makes a workflow more fault-tolerant to an LLM failure?

1. A workflow with natural opportunities for human input while maintaining the benefits of automation. These workflows typically:

- Have tasks that are time-intensive to do but quick to review: for example, drafting a meeting summary

- And/or can be accomplished with interactive/copilot-style workflows: for example, investigating a threat or service outage

2. A workflow with a relatively low business cost of making a mistake. Any use case that increases liability (e.g., loan or medical decisions) or has a high direct cost (e.g., payment automation) is not well-suited for LLMs.

Workflows with a low cost of a mistake typically have a way of remedying an error. A user talking to a customer success bot can always call for a human agent if the chatbot isn’t helpful.
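One way to encode these properties is a small routing step that decides, for each draft, whether it can go out automatically, should wait for a quick human review, or should be handed to a person. This is a hypothetical sketch: the `Draft` fields, the three routes, and the keyword check for a handoff request (a naive stand-in for real intent detection) are illustrative assumptions, not a prescribed design.

```python
from dataclasses import dataclass
from enum import Enum

class Route(Enum):
    AUTO = "send automatically"
    REVIEW = "queue for quick human review"
    HUMAN = "hand off to a human"

@dataclass
class Draft:
    text: str
    passed_checks: bool  # result of programmatic validation on the draft
    high_stakes: bool    # hypothetical flag, e.g. the draft touches refunds or account access

# Naive keyword match standing in for detecting "let me talk to a person".
HANDOFF_PHRASES = ("human", "agent", "representative")

def route(draft: Draft, user_message: str) -> Route:
    """Decide what happens to an LLM draft based on the cost of a mistake."""
    # The remedy path: a user can always ask for a person.
    if any(p in user_message.lower() for p in HANDOFF_PHRASES):
        return Route.HUMAN
    # Drafts that fail validation never go out on their own.
    if not draft.passed_checks:
        return Route.HUMAN
    # Quick to review, costly to get wrong: keep a human in the loop.
    if draft.high_stakes:
        return Route.REVIEW
    return Route.AUTO

print(route(Draft("Your refund of $40 is approved.", True, True), "Can I get a refund?").value)
```

The design keeps automation as the default for low-stakes drafts while making every costly path go through a person.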

4. General LLM platforms can’t serve it

As foundation model providers expand capabilities, they will serve more business workflows that are simple and globally applicable. Use cases like simple document summarization will become commoditized.

We look for two characteristics that indicate a workflow will best be served by an application-specific team:

1. The application is complex and domain-specific. To do it well requires a web of workflow-specific inference and information retrieval logic, as described above.

2. The application requires use-case-specific context data, e.g., historical user activity or integration with third-party systems.

5. Incumbents can’t serve it

On the other side of the competitive spectrum, some LLM features will be best served by incumbent software providers. New entrants face an unfair playing field, as incumbents often have distribution, relationship, and data advantages.

What makes an opportunity where new entrants should win? We again look for two primary drivers:

1. An LLM-powered workflow is so different from what exists today that it will require an entire re-architecting of the product to support it. The incumbent’s existing product, infrastructure, and data aren’t relevant in the new paradigm.

Example: Accounting is dominated by decades-old software where users click through legacy ERP systems. LLM-enabled accounting software will require new types of user interfaces and workflows that don’t fit into legacy software. Legacy ERPs have lots of historical data, but not on the new user behaviors that would emerge in an assistant/automated paradigm.

2. The incumbents don’t have the talent or the incentive to build an effective LLM-based system. LLM-based automation might cannibalize existing revenue streams or decrease competitive moats. Mature companies may not have the talent to redesign the product for a new paradigm.

Example: A product that automatically fixes security vulnerabilities in application code for enterprises should be platform-agnostic, to work with the variety of vulnerability scanners and development platforms. Existing platforms might build in some automation features, but are unlikely to make a neutral application that could commoditize their current product.

6. System and product-driven defensibility

As discussed in our last post, LLMs change the shape of data moats.

Companies can no longer assume their model will remain a defensible asset.

Instead, the best LLM applications will provide the opportunity to build data moats in the surrounding LLM stack:

- Provide richer context and build better information embedding, storage, and retrieval systems

- Improve LLM workflow design and orchestration logic

- Capture edge cases to optimize the end-to-end system

LLM applications will also benefit from traditional product moats, such as:

- Accelerate new feature development with user feedback

- Improve interfaces based on product usage

- Get customers to design workflows around your product

- Integrate with third party systems and services

- Become a system of record

Conclusion

LLMs provide a powerful new building block for business applications. It’s tempting to try to apply them everywhere.

But a specific set of characteristics makes a B2B workflow well-suited to a new LLM application. The workflow must be one that LLMs can do well, and it must be a good fit for LLMs’ non-deterministic behavior. It must be novel and complex enough that it won’t be served by a general LLM platform or an incumbent software provider. And it must allow for system and product differentiation to grow over time.

In upcoming blog posts, we’ll start to dive into specific application areas we’re excited about.

If you’re building an LLM-powered B2B application, we’d love to hear from you at info@theory.ventures.