Never Forget What I Said: Building a Data Lake of Every Call

AI notetakers are a dime a dozen, so what pushed us to make our own?

At Theory, we meet hundreds of times per month to discuss markets, opportunities, strategies, and internal tools. With so many conversations, critical insights can get lost—buried in personal notes, scattered across individual transcribers, or, in the worst case, never captured at all.

We needed a transcription system that could sift through the clutter and make our data actionable: one that could quickly surface insights from transcripts, bring forward relevant past conversations when we review company records, and turn discussions into meaningful to-dos routed to the correct teammate.

Designing our own notetaking pipeline allowed us to customize these workflows exactly as we needed.

So, how did we do it?

Anatomy of Transcription

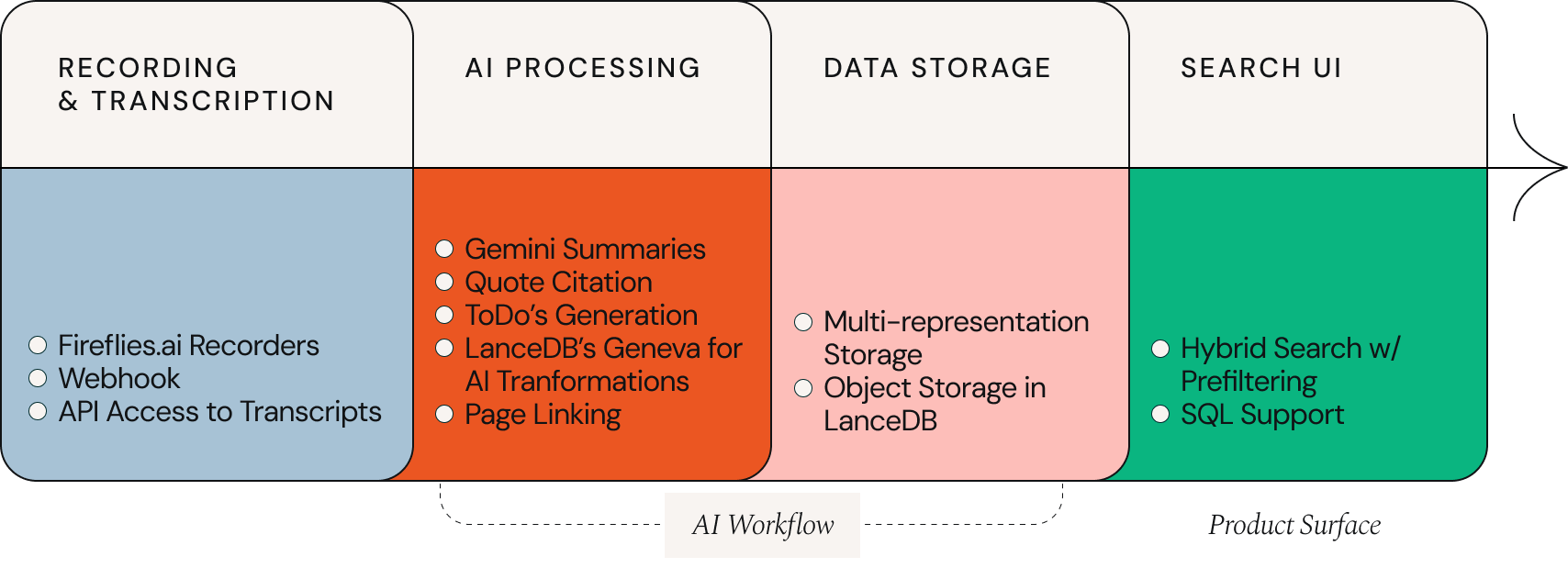

Our stack consists of four parts:

- Recording & Transcription: Fireflies.ai for its full API access, webhook support for real-time processing, and structured data output.

- AI Processing: Google Gemini for summarization with citation and LanceDB Geneva for transformations on data columns.

- Data Storage: LanceDB to store semi-structured data from the calls, including the initial transcript, embedded chunks, and extracted features like to-dos and company connections.

- Search UI: LanceDB’s built-in hybrid search with pre-filtering for quick information retrieval.

Together, these layers turn raw conversations into structured, searchable knowledge.
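To make "hybrid search with pre-filtering" concrete, here is a minimal pure-Python sketch of the idea. It is not LanceDB's implementation or API. The `Chunk` fields, the term-count keyword score, and the reciprocal rank fusion step are all assumptions chosen for illustration. The point is the order of operations: restrict the candidates by metadata first, then rank what is left by both vector similarity and keyword match.

```python
from dataclasses import dataclass
import math


@dataclass
class Chunk:
    # Hypothetical record shape; the real table schema is not described in this post.
    meeting_id: str
    company: str
    text: str
    embedding: list[float]


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def keyword_score(query: str, text: str) -> int:
    # Toy keyword score: how often each query term occurs in the text.
    words = text.lower().split()
    return sum(words.count(term) for term in query.lower().split())


def hybrid_search(chunks, query, query_vec, company=None, k=60, limit=3):
    # Pre-filter: drop non-matching rows before any ranking happens.
    candidates = [c for c in chunks if company is None or c.company == company]

    by_vector = sorted(candidates, key=lambda c: cosine(query_vec, c.embedding), reverse=True)
    by_keyword = sorted(candidates, key=lambda c: keyword_score(query, c.text), reverse=True)

    # Reciprocal rank fusion: combine the two rankings into one score per chunk.
    fused = {}
    for ranking in (by_vector, by_keyword):
        for rank, chunk in enumerate(ranking):
            fused[id(chunk)] = fused.get(id(chunk), 0.0) + 1.0 / (k + rank + 1)

    return sorted(candidates, key=lambda c: fused[id(c)], reverse=True)[:limit]


chunks = [
    Chunk("m1", "Acme", "pricing discussion for the acme renewal", [1.0, 0.0]),
    Chunk("m2", "Acme", "hiring plan for next quarter", [0.0, 1.0]),
    Chunk("m3", "Globex", "pricing pricing pricing", [1.0, 0.0]),
]
results = hybrid_search(chunks, "pricing", [1.0, 0.0], company="Acme")
```

Because the filter runs first, the Globex chunk never competes for a slot in the results, even though it matches the query strongly on both signals.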

Our First Hiccup: Meeting Deduplication

One of our first technical hurdles emerged from a simple scenario: when multiple team members attend the same meeting, several unique webhooks are triggered. Without proper deduplication, our system would process the same meeting multiple times, creating:

- duplicate transcripts and insights,

- redundant notifications to the team, and

- unnecessary API costs and processing overhead.

The Solution: Cloud Tasks with Deterministic Keys. Cloud Tasks deduplicates by task name, so when every webhook for the same meeting produces the same key, only one processing task is created. This approach eliminated duplicate processing while keeping webhook responses fast. The key insight was that Cloud Tasks’ built-in deduplication handles the complexity of distributed state management, while our deterministic key generation ensures that webhooks from different sources resolve to the same meeting.
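A minimal sketch of deterministic key generation follows. The fields that go into the key (title, scheduled start, participants) are assumptions, since the post does not say which attributes the real keys are built from. The in-memory queue stands in for Cloud Tasks, which rejects a create request whose task name matches an existing or recently finished task.

```python
import hashlib
from datetime import datetime, timezone


def meeting_task_name(title: str, start: datetime, participants: list[str]) -> str:
    """Derive the same task name for every webhook that describes the same meeting."""
    if start.tzinfo is None:
        raise ValueError("start must be timezone-aware so the key is stable across hosts")

    # Normalize everything that can differ between webhook sources.
    norm_title = " ".join(title.lower().split())
    start_minute = int(start.astimezone(timezone.utc).timestamp()) // 60
    people = ",".join(sorted(p.strip().lower() for p in participants))

    digest = hashlib.sha256(f"{norm_title}|{start_minute}|{people}".encode()).hexdigest()
    # A hashed name avoids predictable, sequential task IDs.
    return f"meeting-{digest[:32]}"


class InMemoryQueue:
    """Stand-in for the task queue: the first enqueue of a name wins."""

    def __init__(self) -> None:
        self._names: set[str] = set()

    def enqueue(self, name: str) -> bool:
        if name in self._names:
            return False  # duplicate webhook, nothing new to process
        self._names.add(name)
        return True


start = datetime(2024, 5, 1, 15, 0, tzinfo=timezone.utc)
first = meeting_task_name("Weekly Pipeline Review", start, ["ana@example.com", "bo@example.com"])
second = meeting_task_name("  weekly pipeline  review ", start, ["Bo@example.com", "ana@example.com "])

queue = InMemoryQueue()
accepted = [queue.enqueue(first), queue.enqueue(second)]
```

The webhook handler only has to compute the name and attempt the enqueue. It holds no shared state of its own, which is what keeps the response path short.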

Linking Company Data with NER

During each meeting, a significant portion of our insights, to-dos, and follow-up research revolves around specific companies. Directly linking company records to relevant transcripts and documents greatly enhances our ability to organize and retrieve information. Identifying the company mentions behind these connections is a classic NLP task known as Named Entity Recognition (NER); matching each mention to a record is the related task of entity linking.

To address this, we combined multiple tools with our existing infrastructure to automatically detect potential named entities within transcripts. These entities are then linked to corresponding company records through a search over our company database—a system similar to what underpins our broader universe of companies.
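The linking step can be sketched as follows, assuming the mentions have already been extracted by an upstream NER tool. The suffix list, the similarity threshold, and the use of `difflib` are illustrative assumptions, not a description of our production search.

```python
import re
from difflib import SequenceMatcher

# Legal suffixes that rarely appear when a company is mentioned in conversation.
LEGAL_SUFFIXES = {"inc", "llc", "ltd", "corp", "co"}


def normalize(name: str) -> str:
    # Lowercase, replace punctuation with spaces, and drop legal suffixes.
    tokens = re.sub(r"[^a-z0-9 ]", " ", name.lower()).split()
    return " ".join(t for t in tokens if t not in LEGAL_SUFFIXES)


def link_entity(mention: str, companies: dict[str, str], threshold: float = 0.85):
    """Return the id of the best-matching company record, or None if nothing is close."""
    target = normalize(mention)
    if not target:
        return None

    best_id, best_score = None, 0.0
    for company_id, name in companies.items():
        score = SequenceMatcher(None, target, normalize(name)).ratio()
        if score > best_score:
            best_id, best_score = company_id, score

    return best_id if best_score >= threshold else None


# Hypothetical company records keyed by id.
companies = {"c1": "LanceDB, Inc.", "c2": "Fireflies.ai", "c3": "Notion Labs"}
links = {m: link_entity(m, companies) for m in ["lancedb", "Fireflies AI", "the weather"]}
```

Returning `None` below the threshold matters as much as finding matches: an unlinked mention is easier to live with than a transcript attached to the wrong company record.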

LLM Capabilities That Work

The heart of our system is the summarization and extraction pipeline. Rather than asking the LLM to directly generate a summary, we designed a structured thinking process that guides the model through thorough analysis before synthesis.

The prompt first instructs the model to classify the meeting type, distinguishing between structured recurring meetings and anything ad hoc, which enables context-appropriate summarization strategies. For example, during pipeline meetings, we emphasize action items and diligence follow-ups. Keeping a separate strategy for each meeting type also lets us quickly modify the prompts we use for these extractions.
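One way to structure such a prompt is sketched below. Only the pipeline guidance comes from the description above. The `ad_hoc` guidance, the step wording, and the function name are hypothetical, and the real prompt is considerably longer.

```python
# Per-type guidance lives in one table, so changing a strategy is a one-line edit.
MEETING_TYPES = {
    "pipeline": "Emphasize action items and diligence follow-ups.",
    "ad_hoc": "Summarize the main topics discussed and any decisions reached.",  # hypothetical
}


def build_summary_prompt(transcript: str) -> str:
    """Build a classify-then-summarize prompt for a single transcript."""
    type_list = ", ".join(MEETING_TYPES)
    guidance = "\n".join(
        f"- If the type is {name}: {rule}" for name, rule in MEETING_TYPES.items()
    )
    return (
        f"Step 1. Classify the meeting as one of: {type_list}.\n"
        "Step 2. Analyze the transcript thoroughly before writing anything.\n"
        "Step 3. Write the summary, following the guidance for the chosen type:\n"
        f"{guidance}\n\n"
        f"Transcript:\n{transcript}"
    )


prompt = build_summary_prompt("Alice: Let's review the pipeline.")
```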

To ensure reliability, we implemented a robust citation system that links every claim and to-do in the summary back to specific moments in the transcript. The LLM is instructed to use citation tags that reference line numbers where supporting evidence can be found.
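A minimal sketch of line-number citations follows. The `[L3]` and `[L3-L5]` tag format is an assumption made for this example, as the post does not specify the tag syntax. The transcript is numbered before it goes to the model, and every tag in the returned summary is resolved back to the lines it cites.

```python
import re

# Hypothetical tag format: [L3] for one line, [L3-L5] for a range.
CITATION = re.compile(r"\[L(\d+)(?:-L(\d+))?\]")


def number_lines(transcript: str) -> str:
    """Prefix each transcript line with its 1-based number for the prompt."""
    return "\n".join(
        f"L{i}: {line}" for i, line in enumerate(transcript.splitlines(), start=1)
    )


def resolve_citations(summary: str, transcript: str) -> list[tuple[str, list[str]]]:
    """Map every citation tag in the summary to the transcript lines it references."""
    lines = transcript.splitlines()
    resolved = []
    for match in CITATION.finditer(summary):
        start = int(match.group(1))
        end = int(match.group(2) or start)
        if not 1 <= start <= end <= len(lines):
            # A tag pointing outside the transcript means the claim has no evidence.
            raise ValueError(f"citation {match.group(0)} is out of range")
        resolved.append((match.group(0), lines[start - 1:end]))
    return resolved


transcript = "Alice: Let's send the deck.\nBob: I will do it Friday.\nAlice: Great."
summary = "Bob will send the deck on Friday [L1-L2]."
evidence = resolve_citations(summary, transcript)
```

Validating the tags after generation gives a cheap check on the model: a summary whose citations do not resolve can be rejected or regenerated before anyone reads it.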

We provide these summaries as Notion pages and use Notion’s block-level linking to give users a convenient way to explore the call notes and summaries.

Meeting to Meaning

The workplace is moving toward consolidating data within organizations, making it easier to search, share, and use across multiple systems. While off-the-shelf notetaker tools can cover the basics like transcripts and email follow-ups, we chose to build something deeper. Our approach allows us to seamlessly connect this data with the rest of our internal tooling, even integrating with our team’s MCP server. Individual meeting transcripts become part of a larger, living dataset that links directly into our existing knowledge graph.