Exploring the LLM Infra Stack, Part 4: The Interface Layer

4. Interface Layer and Conclusion

This is the final post in our 4-part series on the LLM infra stack.

You can catch up on our last three posts on The Data Layer, The Model Layer, and The Deployment Layer.

The Interface Layer

At their core, all LLMs do is take sequences of text as input and generate sequences of text as output. But the most exciting applications of LLMs will see models not just generate text but act as agents that interact with the world.

An LLM personal assistant will book dinner reservations.

An LLM security analyst will fix permissions on a misconfigured cloud instance.

An LLM accountant will send invoices and close the books at the end of the month.

We have seen some early examples of LLMs taking action with plugins and general agent frameworks like AutoGPT.

LLM agents today are limited by model capabilities. If you ask them to do a multi-step task (e.g., finding your existing dinner reservation, canceling it, searching for a new restaurant, and booking that), they’ll often get lost along the way.

But this is a short-term problem. Foundation model providers are rapidly improving LLMs’ ability to interact with other software and services – e.g., generating reliable API queries and JSON outputs. Models are also being trained to perform better long-term planning and reasoning.
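To make "reliable JSON outputs" concrete, here is a minimal sketch of the guardrail an application might put between a model and a third-party API. It assumes a hypothetical `book_reservation` tool with three fields (`restaurant`, `party_size`, `time`). The function name and schema are illustrative, not any provider's API. Before anything is sent, the code checks that the model's output parses as JSON, has the expected fields and types, and uses an ISO 8601 timestamp.

```python
import json
from datetime import datetime

# Hypothetical tool schema: field name -> expected Python type.
RESERVATION_FIELDS = {"restaurant": str, "party_size": int, "time": str}


def parse_reservation_call(raw: str) -> dict:
    """Parse and validate a model-generated JSON call to a hypothetical
    book_reservation tool.

    Raises ValueError so the caller can re-prompt the model instead of
    sending a malformed request to a third-party API.
    """
    try:
        args = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(args, dict):
        raise ValueError("expected a JSON object")
    missing = RESERVATION_FIELDS.keys() - args.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    for name, expected in RESERVATION_FIELDS.items():
        value = args[name]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected):
            raise ValueError(f"{name} should be {expected.__name__}")
    # Reject times the model phrased loosely, e.g. "tomorrow at 7".
    try:
        datetime.fromisoformat(args["time"])
    except ValueError as exc:
        raise ValueError(f"time is not ISO 8601: {args['time']!r}") from exc
    return args
```

As models get better at structured output, fewer calls should fail this check. Validating before acting is still cheap insurance when the action is booking or canceling something on a user's behalf.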

However, agents are also limited by a lack of robust user and third-party interfaces. People often think of APIs and user interfaces as separate categories, but we see them as two sides of the same problem:

LLMs need robust interfaces and tools to interact with the world. This is what will transform them from text-generation machines into the operating system that will power the next generation of software.

Let’s dive in!

Third-party application interfaces

LLMs will interact with third-party applications to perform actions for a user or search for additional information to accomplish a task.

Imagine using a chatbot to plan a vacation. The application shouldn't just list a set of flight options – it should allow the user to book a seat and rent a car at the destination.

In the B2B world, a marketer might use an LLM to generate social media content. They shouldn’t have to copy and paste it into each channel – the LLM platform should do it for them automatically.

Over time, many platforms will release APIs designed specifically for bot/agent access. However, there are still major opportunities for new companies to facilitate these interactions:

- It’s time-consuming to build one-to-one API connections with each airline or social media platform. A single integrated platform that’s kept up-to-date with the latest API releases will be more convenient for developers.

- API requests will often need to be pre-processed to transform and standardize inputs/outputs in a way that makes them easier for LLMs to interpret.

- API requests will need to be authenticated, which is hard when many platforms today are specifically designed to detect and block bot traffic.
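The second point, pre-processing inputs and outputs, is the kind of work an integration platform would absorb. Here is a minimal sketch that assumes two hypothetical airline APIs with different response shapes (the field names `flightCode`, `priceCents`, and so on are invented for illustration). An adapter per source maps each response into one standard record, which is then rendered as short, uniform lines a model can compare easily.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Flight:
    """Standardized flight record handed to the LLM."""
    carrier: str
    flight_no: str
    depart: str       # ISO 8601 local time
    price_usd: float


# Hypothetical response shape from "airline A".
def from_airline_a(payload: dict) -> list[Flight]:
    return [
        Flight("A", f["number"], f["departure_time"], f["fare"]["usd"])
        for f in payload["flights"]
    ]


# Hypothetical "airline B" nests results differently and prices in cents.
def from_airline_b(payload: dict) -> list[Flight]:
    return [
        Flight("B", r["flightCode"], r["dep"], r["priceCents"] / 100)
        for r in payload["data"]["results"]
    ]


ADAPTERS = {"airline_a": from_airline_a, "airline_b": from_airline_b}


def normalize(source: str, payload: dict) -> list[Flight]:
    """Dispatch a raw API payload to the adapter for its source."""
    return ADAPTERS[source](payload)


def to_llm_text(flights: list[Flight]) -> str:
    """Render flights cheapest first, one compact line each."""
    rows = sorted(flights, key=lambda f: f.price_usd)
    return "\n".join(
        f"{f.carrier}{f.flight_no} departs {f.depart} for ${f.price_usd:.2f}"
        for f in rows
    )
```

Keeping the adapters behind one registry is what lets a platform track API changes in one place instead of in every application that calls them.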

Other applications might see LLMs interacting with software that doesn’t have available APIs. The LLM travel app can probably integrate directly with the Marriott API, but if you stay at a local bed and breakfast, it might need to click through their website.

These apps will need tooling for LLMs to navigate your computer or web browser, return data in a way the model can understand, and allow it to take action.
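One piece of that tooling is turning a web page into something a model can act on. The sketch below covers only the "return data in a way the model can understand" step, using Python's standard-library `html.parser`. A real browser agent would typically drive an actual browser, and the output format here is an assumption for illustration. It lists the page's links, buttons, and inputs as numbered elements so the model can reply with something like "click [2]".

```python
from html.parser import HTMLParser


class ActionExtractor(HTMLParser):
    """Collect links, buttons, and inputs so a model can refer to them by index."""

    def __init__(self):
        super().__init__()
        self.elements = []   # list of (tag, attrs dict, visible text)
        self._open = None    # [tag, attrs, text] of the element being read

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "input":
            # Inputs are void elements with no text, so label them by name.
            self.elements.append(("input", attrs, attrs.get("name", "")))
        elif tag in ("a", "button"):
            self._open = [tag, attrs, ""]

    def handle_data(self, data):
        if self._open is not None:
            self._open[2] += data

    def handle_endtag(self, tag):
        if self._open is not None and tag == self._open[0]:
            t, attrs, text = self._open
            # Collapse runs of whitespace in the visible label.
            self.elements.append((t, attrs, " ".join(text.split())))
            self._open = None


def describe_page(html: str) -> str:
    """Return one numbered line per actionable element on the page."""
    parser = ActionExtractor()
    parser.feed(html)
    parser.close()
    lines = []
    for i, (tag, attrs, text) in enumerate(parser.elements):
        suffix = f" ({attrs['href']})" if "href" in attrs else ""
        lines.append(f"[{i}] {tag}: {text}{suffix}")
    return "\n".join(lines)
```

Taking the action is the other half: the application would map the model's chosen index back to the element and perform the click or form fill in the browser.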

User interfaces

Today, we are in an era where most companies bolt LLMs onto their existing products and interfaces. But LLMs enable totally novel user experiences – what will true LLM-native applications look like?

Early LLM data analysis tools use a chat interface. What if the user wants to modify a table directly to demonstrate their desired transformation instead of describing it in text?

Early LLM email productivity tools generate drafts in the editing window. What if the LLM email client of the future has an agent that runs on autopilot, where the user just has an ‘inbox’ of exceptions and messages to review?
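The "inbox of exceptions" idea comes down to a routing rule: the agent acts on its own when it is confident, and hands everything else to the user. Here is a minimal sketch. It assumes each draft reply carries a category and a confidence score in [0, 1], and that some categories always need a human. The class names, threshold, and categories are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class DraftReply:
    message_id: str
    category: str       # e.g. "scheduling", "legal" (hypothetical labels)
    confidence: float   # assumed score in [0, 1] from the model or a classifier


@dataclass
class Autopilot:
    threshold: float = 0.85
    # Categories that always go to the user, regardless of confidence.
    always_review: frozenset = frozenset({"legal", "payments"})
    sent: list = field(default_factory=list)
    inbox: list = field(default_factory=list)   # exceptions for the user

    def route(self, draft: DraftReply) -> str:
        """Send confident, low-risk drafts; queue the rest for review."""
        if draft.category in self.always_review or draft.confidence < self.threshold:
            self.inbox.append(draft)
            return "review"
        self.sent.append(draft)
        return "sent"
```

The user-facing product is then built around `inbox` rather than a compose window, which is the shift in interface the question above is pointing at.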

We are early in this world, but it’s clear LLMs will drive an explosion of new user interfaces and experiences.

User interfaces will also be a key source of user-generated data to feed into observability and product analytics platforms. Thoughtfully designed interfaces will generate the best data to improve an LLM system over time.

Many of these novel interaction patterns will be built by new LLM applications as product differentiators. However, many applications require similar front-end components and interactions. There is an opportunity for a new set of front-end infrastructure companies that are designed for LLMs.

Key open questions

- As platforms release LLM agent-friendly APIs over time, will they disintermediate standalone third-party providers of application interfaces?

- Will LLM user interface infrastructure accrue value as a standalone company? For many companies, this will be their core product differentiation. Alternatively, UI elements might be best served by existing model development platforms or front-end libraries.

Companies working on the interface layer

- Third-party application interfaces: Anon, Recall.ai, Bruinen, Induced AI, Reworkd

- User interfaces: xPrompt, Inkeep

Conclusion

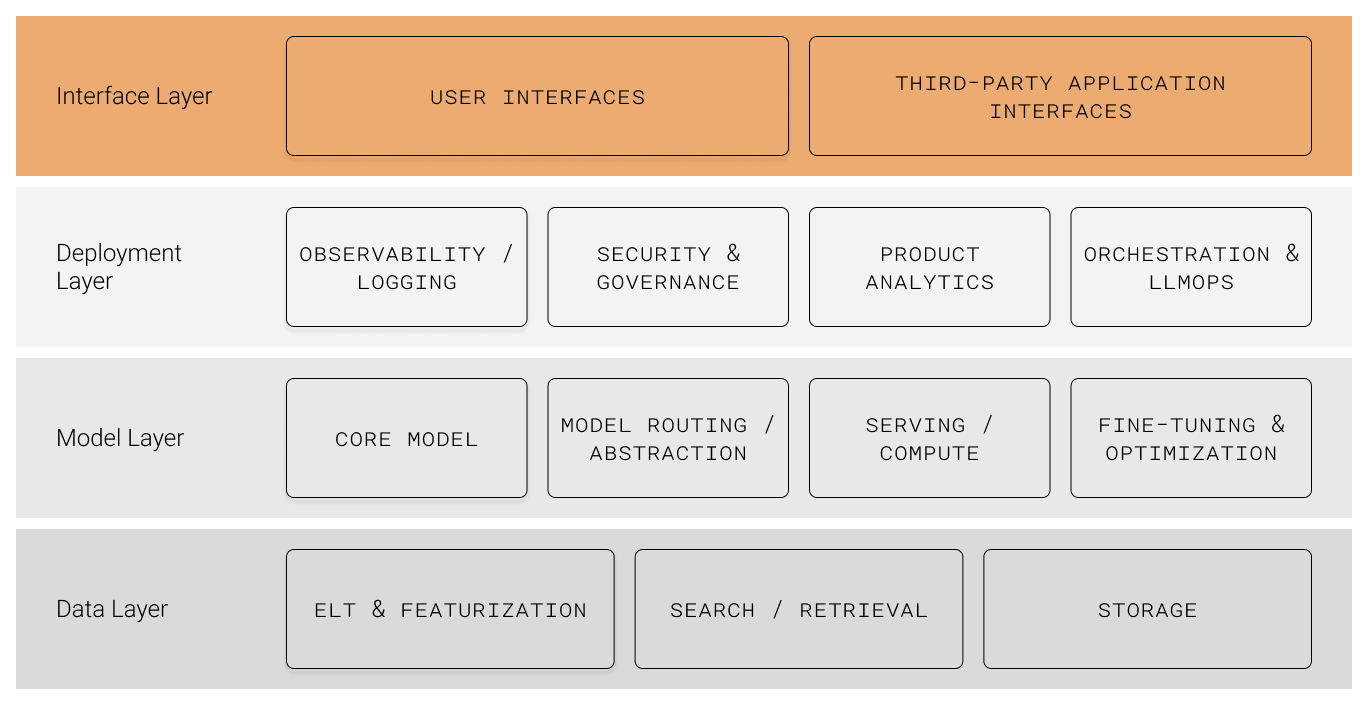

Here are the key takeaways from our LLM infrastructure research:

Practically all LLM applications need an effective Data Layer to featurize, store, and retrieve relevant information for the model to respond to a user query (while making sure it does not have access to data it shouldn’t).
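The parenthetical about access matters most in retrieval. Here is a minimal sketch of permission-aware retrieval. It assumes each document is tagged with the groups allowed to read it, and it uses simple word overlap as a stand-in for the embedding similarity a real Data Layer would use. The point it illustrates is ordering: the permission filter runs before ranking, so restricted text never reaches the model's context.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Doc:
    text: str
    allowed_groups: frozenset   # groups permitted to see this document


def tokenize(text: str) -> set:
    return set(text.lower().split())


def retrieve(query: str, docs: list, user_groups: set, k: int = 2) -> list:
    """Return up to k docs the user may see, ranked by word overlap.

    Word overlap stands in for embedding similarity in this sketch.
    """
    q = tokenize(query)
    scored = []
    for d in docs:
        if not d.allowed_groups & user_groups:
            continue  # permission check happens before any ranking
        overlap = len(q & tokenize(d.text))
        if overlap:
            scored.append((overlap, d))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]
```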

Depending on the application, companies will use a mixture of self-hosted and managed service models. Many use cases will see cost and performance benefits from fine-tuning a model. For anyone not relying entirely on a foundation model-managed service, the Model Layer will require critical infrastructure to manage model routing, serving/deployment, and fine-tuning.
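Model routing can start as a simple rule: send each task to the cheapest model that is trusted with it. Here is a minimal sketch, assuming a hypothetical deployment with one small fine-tuned model and one large foundation model. The model names, task types, and prices are placeholders for illustration, not real offerings.

```python
from dataclasses import dataclass


@dataclass
class Route:
    name: str
    cost_per_1k_tokens: float   # placeholder price, for illustration only
    handles: frozenset          # task types this model is trusted with


# Hypothetical deployment: a cheap fine-tuned model plus a general foundation model.
ROUTES = [
    Route("fine-tuned-small", 0.0005, frozenset({"classify", "extract"})),
    Route("foundation-large", 0.01, frozenset({"classify", "extract", "plan", "chat"})),
]


def pick_model(task_type: str, routes=ROUTES) -> str:
    """Choose the cheapest configured model that handles this task type."""
    candidates = [r for r in routes if task_type in r.handles]
    if not candidates:
        raise ValueError(f"no model configured for task {task_type!r}")
    return min(candidates, key=lambda r: r.cost_per_1k_tokens).name
```

Production routers may also weigh latency, measured quality, or a classifier's read of the query. The cost-first rule here just shows where fine-tuning's savings come from.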

The unpredictable nature of LLM behaviors means post-deployment monitoring and controls are essential. The Deployment Layer will need to include security/governance instrumentation across data, model, and observability platforms. Observability/eval and product analytics tooling will enable ongoing monitoring and ad-hoc analysis. As LLM actions become more complex and multi-step, orchestration will be increasingly important.

Last, LLMs will require interfaces to interact with the world. Third-party interfaces will provide the means for LLMs to reliably navigate and perform actions in other systems. User interfaces will enable the next generation of LLM interaction patterns.

We are at the very early stages of designing this production LLM infra stack. There are over 100 early-stage startups tackling this opportunity, and we believe several will become multi-billion-dollar companies.

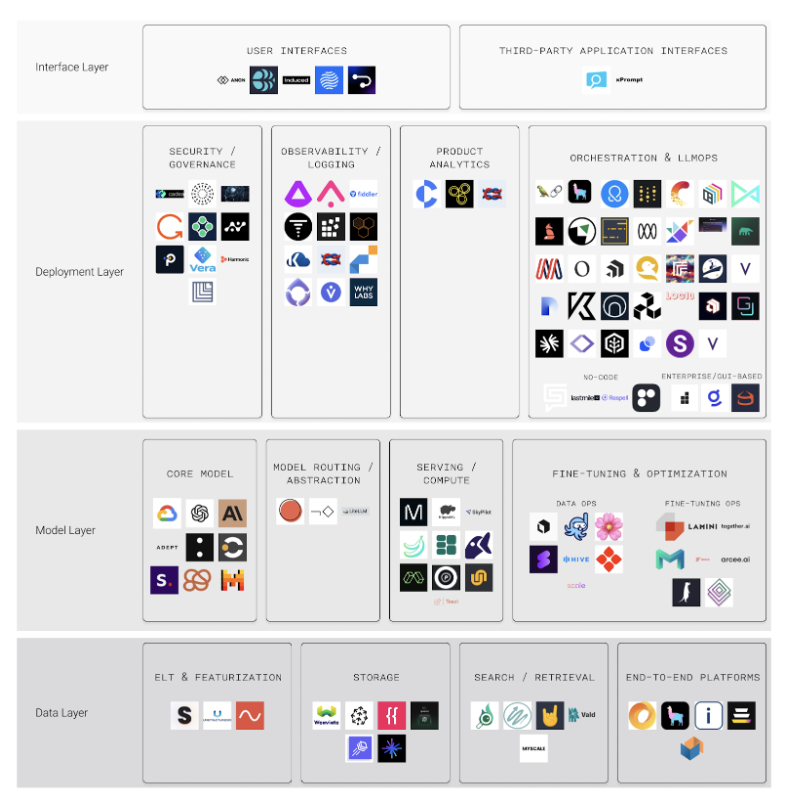

Above is a market map of the full LLM infra landscape. It’s not comprehensive, and many of these companies are hard to place in a single category. If you’re building in this space, we would love to hear from you.

Thanks to the many folks who provided input on this article series and to Ben Scharfstein, Dan Shiebler, Phil Cerles, and Tomasz Tunguz for detailed comments and editing!