Theories

Stay up-to-date on our team's latest theses and research

“When the cost of creating things approaches zero, where does the value lie?” Earlier this month, we met in Berkeley, California, to talk about AI workflows: not in the abstract, but real ones people actually run every week. These are AI automations crafted by the users themselves, not built from a disconnected stakeholder’s ticket. These demonstrations of ‘personal software’ offered a preview of the future that so many folks see coming. Three friends opened their laptops and showed us how they’re using models as patient, tenacious co-workers: capturing information, keeping creative work on the rails, and taming calendars and inboxes to create more time for human work.

Backing up, this event was conceived by Hamel Husain and me to sate his frequent inquiries: ‘how are you using AI’, ‘tell me about your workflows’, and ‘are you using any new AI tools this week?’. We wanted to craft a show-and-tell where some of the folks living in the future tell us what’s actually saving them time or expanding their aperture of focus.

Tomasz Tunguz went first and made a strong case for compressing the firehose. Tomasz leads Theory Ventures as its General Partner, and his workflow is designed to support that. He’s built a pipeline that watches a slate of podcasts, turns speech into text, then synthesizes what matters: the three or four themes worth caring about, the quotes that actually support those themes, and even a “contrarian take” prompt emulating Peter Thiel’s strategy. The punchline isn’t a dashboard—it’s a daily email he can read in fifteen minutes instead of forty hours listening.

Hamel responded by asking if any of these podcasts are ‘incompressible’. In a testament to the fidelity of this system, Tom admitted that he rarely “goes back to listen”. He does, however, interrogate the transcripts, ask targeted questions against them, add further research queries, and collate follow-ups into a task list so the research yields outcomes. Along the way, the system quietly flags net-new companies and pushes them into the CRM, feeding the rest of the firm’s virtuous cycle.

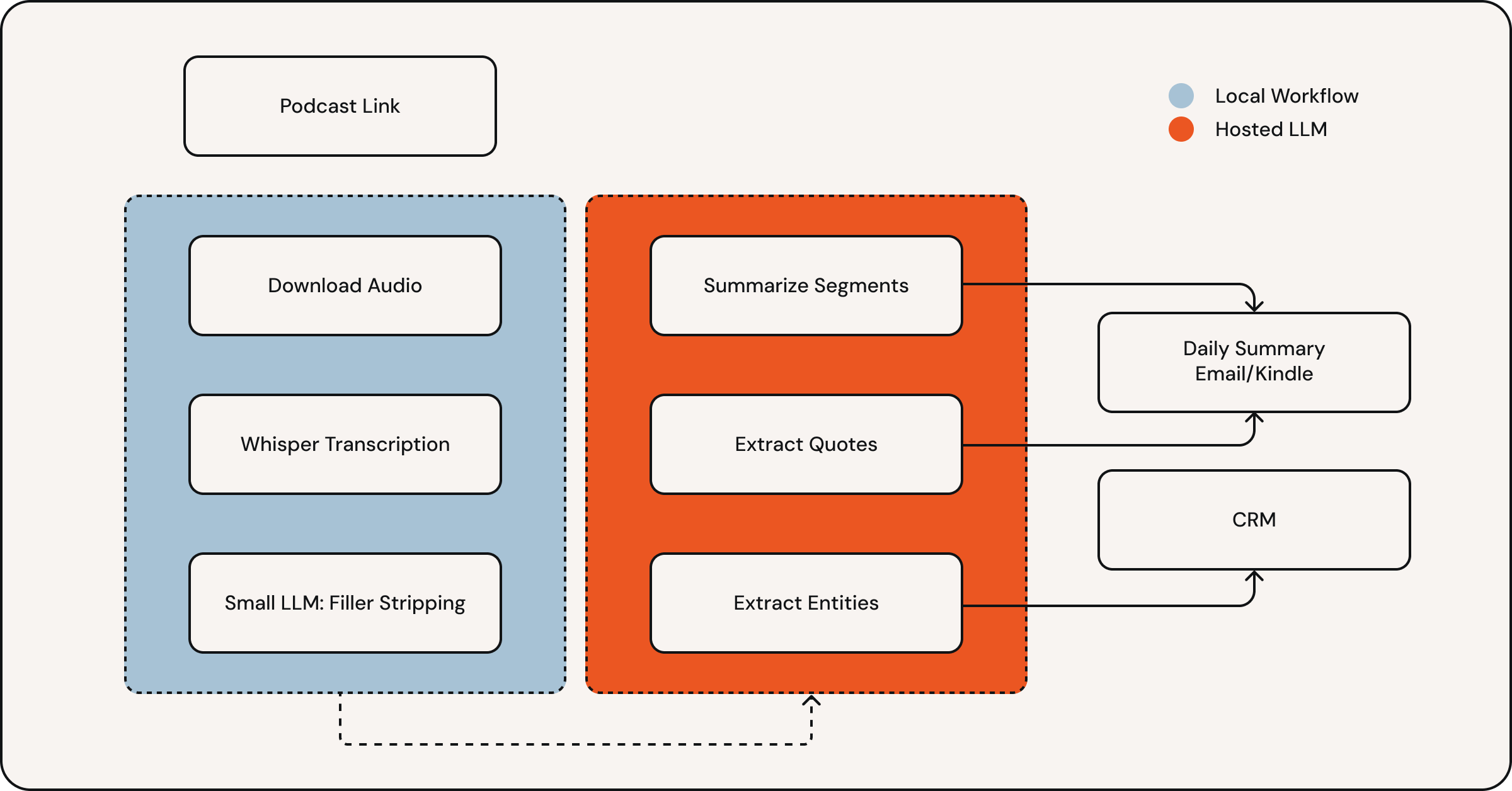

Tom’s pipeline starts with the audio. He pulls episodes down locally, runs Whisper on his GPU to transcribe (cheap and fast), then passes the raw transcript through a small local model whose only job is to strip filler—ums, uhs, throat-clears—so the expensive part that follows stays lean. In his words, input volume drives 80–90% of LLM cost, so the pre-shrink matters. With the text compacted, he hands it to a “real” model (Claude Sonnet) that executes a long prompt: split the episode into 5–15 minute segments, summarize each segment’s argument, lift direct quotes, and extract entities (people, companies) that might deserve a look. From there, a little glue kicks off a downstream routine to check those entities against the CRM and create records for the net-new ones. The outputs are deliberately boring and useful: a daily summary email for him, plus a PDF to his Kindle; behind the scenes, the system often surfaces a couple of new startups worth tracking. He’s running it as a local CLI right now to keep iteration tight while he gets the loop right.
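
The cheap pre-processing steps around the expensive model call can be sketched in a few lines of Python. This is not Tom's actual code: the filler pattern, the `(start_seconds, text)` utterance shape, and the function names are all assumptions made for illustration, and a regex stands in for the small local model he uses to strip filler.

```python
import re

# Hypothetical filler pattern; the talk only mentions "ums, uhs, throat-clears".
FILLER = re.compile(r"\b(?:um+|uh+)\b[,.]?\s*", re.IGNORECASE)


def strip_filler(text: str) -> str:
    """Remove filler tokens and collapse leftover whitespace."""
    return re.sub(r"\s+", " ", FILLER.sub("", text)).strip()


def segment(utterances, window_minutes=10):
    """Group (start_seconds, text) pairs into fixed-length windows."""
    window = window_minutes * 60
    buckets = {}
    for start, text in utterances:
        cleaned = strip_filler(text)
        if cleaned:
            buckets.setdefault(int(start // window), []).append(cleaned)
    return [" ".join(buckets[i]) for i in sorted(buckets)]


def net_new(entities, crm_names):
    """Return extracted entities not already in the CRM, preserving order."""
    known = {name.casefold() for name in crm_names}
    fresh = []
    for entity in entities:
        key = entity.casefold()
        if key not in known:
            known.add(key)  # also de-duplicates repeats within one episode
            fresh.append(entity)
    return fresh


utterances = [
    (0, "Um, welcome back"),
    (30, "uh today we cover agents"),
    (700, "Acme raised a round"),
]
segments = segment(utterances)
new_companies = net_new(["Acme", "Globex", "acme"], crm_names=["Globex"])
```

Each segment would then go to the larger model for summaries, quotes, and entities, and only the entities returned by `net_new` would trigger record creation in the CRM.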

Greg Ceccarelli followed by zooming in on intent; his workflow is incredibly spec-driven, communicating the objectives and not the tool directions. He showed a code-driven video workflow where scenes live as components, the timeline updates the instant you change a prop, and “edits” are just commits. It’s the opposite of pixel-pushing: your source of truth is the spec and prompt. One of Greg’s keys to success is monitoring and iterating on the trail of chats that led to the code. Via a tool his company built, he’s able to store the upstream conversations in addition to the generated files.

Greg also introduced the term “dead looping” for that familiar feeling of issuing the same prompt three times and waiting for the model to suddenly become insightful. He advised changing the frame, starting a new session, and resetting the context that’s poisoning the agent.

Finally, Claire Vo closed the loop by putting agents on the calendar and content grind. Claire seems to have conquered the “Sunday Scaries” with an agent that walks three calendars, pulls event names and attendees, checks email to remember who a meeting is with and why, and spots places to consolidate time. In case you are worried these tools lack heart, this one auto-blocks a morning window to walk her kids to school if the day allows it. The brief lands in Slack, an automation that sharpens the edges of the start of the week.
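
The "if the day allows it" check is a small interval-overlap test across calendars. Here is a minimal Python sketch of that idea, not Claire's implementation: the 8:00 to 8:45 window, the event shape (pairs of naive `datetime` values), and the function name are assumptions.

```python
from datetime import date, datetime, time

# Hypothetical window; the source only says "a morning window".
WINDOW_START = time(8, 0)
WINDOW_END = time(8, 45)


def plan_morning_block(calendars, day, title="Walk kids to school"):
    """Return a block to create if the window is free on every calendar, else None."""
    win_start = datetime.combine(day, WINDOW_START)
    win_end = datetime.combine(day, WINDOW_END)
    for events in calendars:
        for ev_start, ev_end in events:
            # Two intervals overlap when each starts before the other ends.
            if ev_start < win_end and ev_end > win_start:
                return None
    return {"title": title, "start": win_start, "end": win_end}


monday = date(2025, 1, 6)
work = [(datetime(2025, 1, 6, 9, 0), datetime(2025, 1, 6, 10, 0))]
personal = []
family = [(datetime(2025, 1, 6, 12, 0), datetime(2025, 1, 6, 13, 0))]

block = plan_morning_block([work, personal, family], monday)
```

A real agent would read events from the calendar provider's API and handle time zones; the sketch only shows the decision itself.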

Similarly, her content flow reduces the friction of producing a podcast. She feeds a recording and transcript into a generator primed on her own archive to get a draft that’s 80–85% there, which gets her straight to the part of the workflow that requires real taste.

Tomasz, Greg, and Claire offered different perspectives, but all shared an underlying theme: selection, specification, and synthesis. The practical recommendation is to start small: pick one place where you already spend energy (the media you consume, the assets you produce, the logistics that drain you) and sketch the loop you wish existed. Capture, compress, and connect it to the surface where you’ll actually act. Then turn the crank once.

“All teams will henceforth expose their data and functionality to LLMs, and anyone who doesn't do this will be fired.” - Jeff Bezos (theoretically)

Jeff Bezos famously mandated this for web services when starting AWS, and the version above is a likely update for the LLM era.

When building for developers, this discipline made AWS services “externalizable by default,” propelled Amazon’s platform strategy, and helped cement microservices as modern dogma.

When building for LLMs, this discipline means having the right context from a variety of systems easily accessible. Although they are incredibly powerful, LLMs are only as intelligent as the context they have.

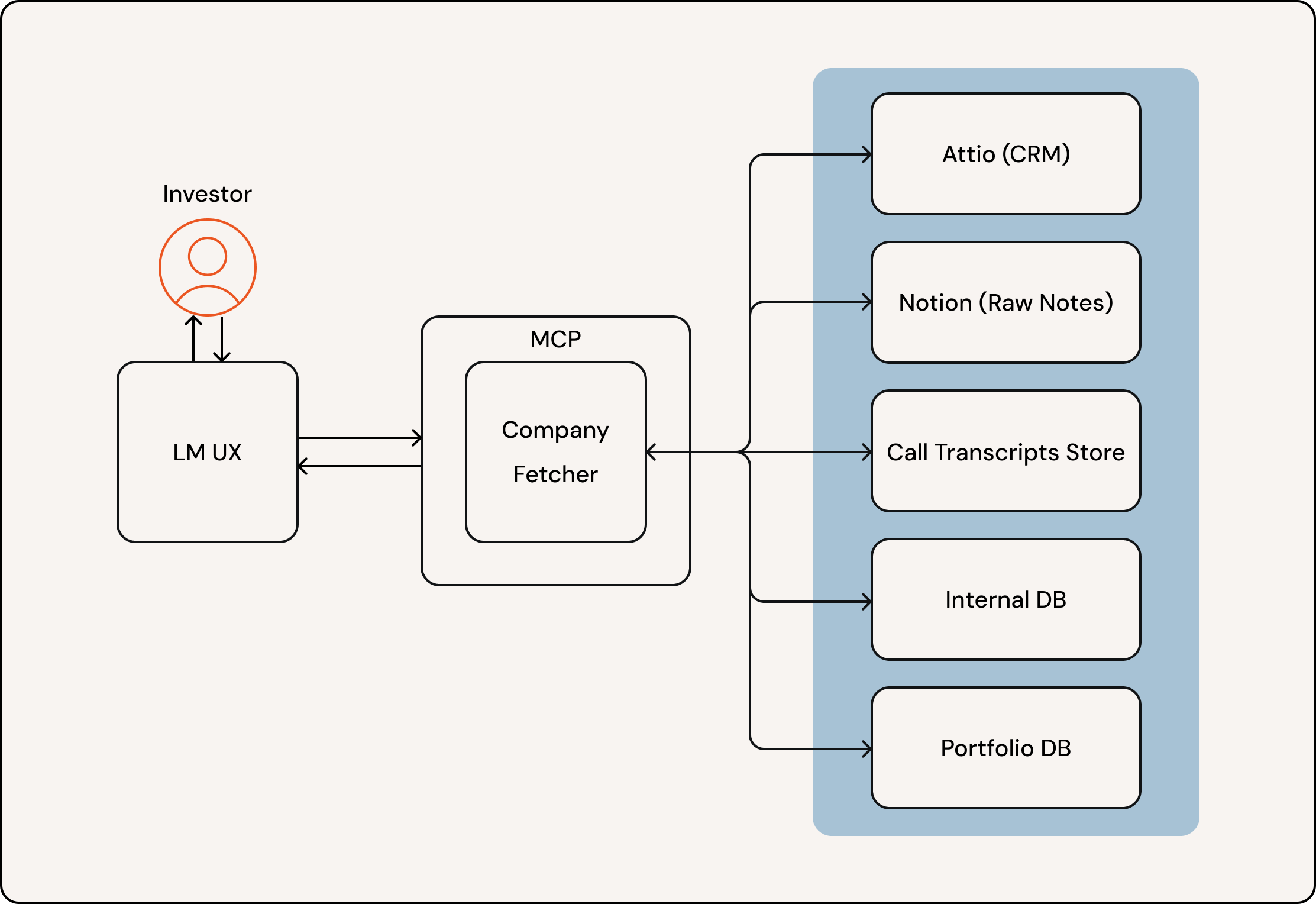

At Theory Ventures, we’re investors, but we’re also all builders, creating internal software to move fast. Our goal is to answer investor questions about any company in seconds, from “What’s new since the last call?” to “Show revenue by cohort” to “Summarize the last three meetings.”

As a simple example, let’s consider an investor writing a company memo to share internally. Information about the company is drawn from several different sources:

Remembering and managing all of these different data sources and copying them is a lot of work, but what if all that context could be available to the investor’s LLM just by mentioning the company name?

Model Context Protocol (MCP) has emerged as a simple and robust way to give LLMs the right context. MCP is a standardized protocol that empowers LLMs to connect with data sources, tools, and workflows.

A well-designed MCP server enables users and agents to complete their work wherever that work is happening: in chat or in a container. MCP is intentionally boring in all the right places: predictable schemas, explicit descriptions, and a clean contract between the system and the model.

We deploy an MCP server on FastMCP Cloud so the LLM client can call it from anywhere without custom infrastructure.

LLMs don’t magically know our world. They need:

So we exposed one MCP tool that the model can reason about: company_context. Given a company name or domain, it returns a structured summary with IDs, core metadata, notes, historical activity, and (when applicable) financials. Internally, this tool orchestrates multiple services, but the LLM only sees a single, well‑documented interface.
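
To make the "single, well-documented interface" concrete, here is a rough Python sketch of what a client sees at the protocol level. MCP tools are advertised with a `name`, `description`, and JSON Schema `inputSchema`, and invoked with a JSON-RPC `tools/call` request. The argument name `company`, the description text, and the `build_call` helper are assumptions for illustration, not our production definition.

```python
# Hypothetical descriptor, shaped like an entry in an MCP tools/list result.
COMPANY_CONTEXT_TOOL = {
    "name": "company_context",
    "description": (
        "Return a structured summary of a company: IDs, core metadata, "
        "notes, historical activity, and financials when applicable."
    ),
    "inputSchema": {
        "type": "object",
        "properties": {
            "company": {
                "type": "string",
                "description": "Company name or domain, e.g. 'example.com'",
            }
        },
        "required": ["company"],
    },
}


def build_call(request_id, arguments):
    """Build a JSON-RPC 2.0 tools/call request after a basic required-field check."""
    required = COMPANY_CONTEXT_TOOL["inputSchema"]["required"]
    missing = [key for key in required if key not in arguments]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": COMPANY_CONTEXT_TOOL["name"], "arguments": arguments},
    }


request = build_call(1, {"company": "example.com"})
```

In practice a framework generates the descriptor from a function signature and docstring, so the descriptions the model reads stay next to the code they describe.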

What the tool returns at a high level:

Here’s the company_context tool’s internal orchestration:

    # Pseudocode: orchestrating a company context query.
    # get_company, the fetch_* helpers, and serialize_company_data are
    # internal services that are not shown here.
    def get_company_context(company_name: str):
        # Resolve the name (or domain) to a canonical company ID.
        company_id = get_company(company_name)

        core = fetch_core_company_data(company_id)
        notes = fetch_notion_pages(company_id)
        history = fetch_historical_data(company_id)

        # Financials are only fetched for portfolio companies.
        financials = None
        if core.get("is_portfolio_company"):
            financials = fetch_financials(company_id)

        return serialize_company_data(core, notes, history, financials)

This tool returns a compact, documented schema so the model knows what it returns and how to consume it. For example:

    {
      "name": {
        "value": "Company A",
        "description": "Public-facing name of the company"
      },
      "id": {
        "value": "123444ee-e7f4-4c9f-9a4e-e018eae944d6",
        "description": "Canonical company UUID in our system"
      },
      "domains": {
        "value": ["example.com", "example.ai"],
        "description": "Known web domains used by the company"
      },
      "notion_pages": {
        "value": [
          {"page_id": "abcd1234", "title": "Intro & thesis", "last_edited": "2025-07-28"}
        ],
        "description": "Notion pages with analyst/investor notes"
      },
      "is_portfolio_company": {
        "value": true,
        "description": "Whether the company is in our portfolio"
      }
    }
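
One plausible way to produce this shape is to keep the field descriptions in a single table and wrap each raw value with its description at serialization time. The Python sketch below assumes that approach; `describe_fields` is a hypothetical helper, not the real `serialize_company_data`, and the descriptions are copied from the example above.

```python
import json

# Descriptions taken from the example schema; keeping them in one table
# means the model-facing documentation cannot drift per call site.
FIELD_DESCRIPTIONS = {
    "name": "Public-facing name of the company",
    "id": "Canonical company UUID in our system",
    "domains": "Known web domains used by the company",
    "notion_pages": "Notion pages with analyst/investor notes",
    "is_portfolio_company": "Whether the company is in our portfolio",
}


def describe_fields(record):
    """Wrap each raw value as {"value": ..., "description": ...}."""
    unknown = set(record) - set(FIELD_DESCRIPTIONS)
    if unknown:
        # Refuse to emit a field the model would have no description for.
        raise KeyError(f"no description for fields: {sorted(unknown)}")
    return {
        key: {"value": value, "description": FIELD_DESCRIPTIONS[key]}
        for key, value in record.items()
    }


raw = {
    "name": "Company A",
    "domains": ["example.com", "example.ai"],
    "is_portfolio_company": True,
}
payload = json.dumps(describe_fields(raw), indent=2)
```

Failing loudly on an undocumented field is a design choice: it keeps the contract explicit instead of silently shipping values the model has to guess at.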

This isn’t fancy agent pixie dust; it’s just clear contracts that let the model get access to the context it needs without human input.

If you remember only one thing, make it this: Expose your service’s core functionality as MCP tools and make them excellent. That’s the shortest path to truly AI‑native software. It’s the clearest mandate for the next decade.

Imagine dropping Einstein into a back-office job at a random Fortune 500 company. Despite his genius, if he didn’t know what the company does or how the role works, he wouldn’t be much help.

AI systems are rapidly improving at work tasks, like summarizing notes, writing queries, and updating slides. But they suffer from the same challenge: knowing how to do work is very different from actually working at a company.

As our models continue to get smarter, how do we get them to be better at doing real jobs?

Building AI automation for enterprises is so complicated because every company operates differently. Even two businesses in the same industry can have distinct processes, systems, and decision-making.

No matter how smart foundation models get, there is no way for them to address this. It’s not an intelligence issue; it requires knowledge of companies’ internal operations, which are proprietary, idiosyncratic, and often undocumented.

So how can we make AI automations work? We need some way to:

Understand how an enterprise works,

Deliver that knowledge to an AI system, and

Maintain and update that knowledge over time.

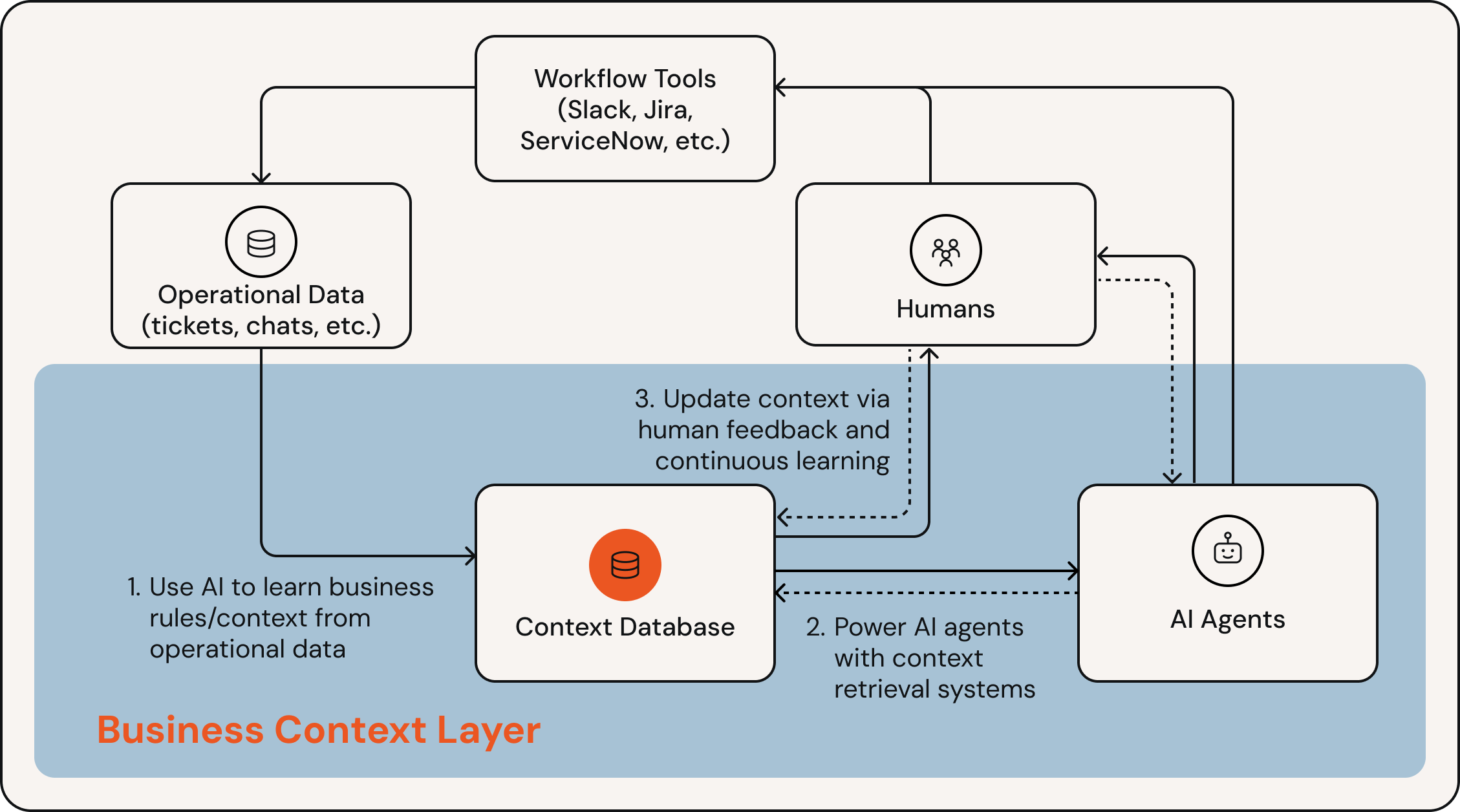

We believe this will create the first major new system of record in years: a Business Context Layer.

Today, a new customer support rep might be told to read a 100-page Standard Operating Procedure (SOP) during onboarding. The SOP includes instructions on how to run processes and handle exceptions: If a customer wants to change account information, always ask for verification. If they ask for a refund, consult these policy rules.

In most companies, these documents are incomplete, outdated, and even contradictory. This leads to teams building tribal knowledge on their own and following processes inconsistently.

When there are millions of AI agents performing complex tasks across the enterprise, we will need something much better: a living system of record that documents all of the written and unwritten rules for how a company operates, and delivers the right instructions to AI systems as they do work. We call this a Business Context Layer or BCL.

We think the key components of this platform are:

Automated context extraction/synthesis from operational data: Today’s SOPs are created manually via painstaking process mining, but a future BCL will need to do this largely automatically. This is a complex problem: a system needs to observe human activity (e.g. logs, tickets, chats, or screen recordings), infer a set of rules that describes the behavior, test those rules on real-world data, then iterate on them until they are as accurate as possible.

A retrieval system to deliver the right context to AI agents: At enterprise scale, a document describing all of the business rules would be far too large to pass in full to every AI agent. Doing so would be prohibitively slow and expensive, and accuracy would likely suffer due to context rot. An enterprise-grade BCL will need to (1) index/store this data efficiently, (2) find and deliver the context required to complete a given task, and (3) track what context was used for which queries to inform future improvements.

An excellent interface for domain experts to maintain and update context: The BCL must be constantly updated and improved as processes change. Maintaining this knowledge base will become a primary job for humans. This is a complex product to build – it’s got elements of a source control platform like GitHub, experimentation like Statsig, and user-friendly collaboration like Figma. Product & UX will be a major differentiator in the space.
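
The three retrieval requirements (index, deliver, track) can be illustrated with a toy store in Python. This is a sketch under heavy assumptions: keyword overlap stands in for whatever ranking a real BCL would use, and the class name, stopword list, and rule IDs are invented for the example. The rule texts come from the SOP example earlier in this post.

```python
import re
from collections import defaultdict

STOPWORDS = {"a", "an", "the", "if", "for", "to", "do", "i", "how"}


class ContextStore:
    """Toy business-rule store: inverted index, top-k lookup, usage log."""

    def __init__(self):
        self.rules = {}                 # rule_id -> rule text
        self.index = defaultdict(set)   # token -> rule_ids containing it
        self.usage_log = []             # (query, [rule_ids delivered])

    @staticmethod
    def _tokens(text):
        return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS

    def add(self, rule_id, text):
        self.rules[rule_id] = text
        for token in self._tokens(text):
            self.index[token].add(rule_id)

    def retrieve(self, query, k=2):
        scores = defaultdict(int)
        for token in self._tokens(query):
            for rule_id in self.index.get(token, ()):
                scores[rule_id] += 1
        # Highest overlap first; ties broken by rule_id for determinism.
        ranked = sorted(scores, key=lambda rid: (-scores[rid], rid))[:k]
        self.usage_log.append((query, ranked))
        return [self.rules[rid] for rid in ranked]


store = ContextStore()
store.add("refund", "If a customer asks for a refund, consult the refund policy rules.")
store.add("account", "If a customer wants to change account information, always ask for verification.")
context = store.retrieve("How do I handle a refund request?", k=1)
```

The usage log is the piece that is easy to skip and hard to add later: it is what lets you ask which rules were in context when a task went wrong.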

In parallel to this emerging context layer, many startups are building “digital twins” of enterprise software and systems. You can let millions of AI agents loose in these simulated environments, provide a goal (e.g., “resolve these support inquiries”), and they will learn how to make business decisions and operate tools via Reinforcement Learning (RL). We think this technique is powerful, but it solves a different problem than a BCL.

Any model fine-tuning comes with trade-offs. You need the expertise and capacity to run training jobs. You need to continuously evaluate and backtest models, because fine-tuning can impact them in unexpected ways. When new models come out, you have to do the whole thing over. RL has all these challenges and more: it is notoriously difficult/unstable to train and very hard to design the appropriate scoring/reward functions, often resulting in unexpected behaviors.

For enterprise workflow automation, there are two other major limitations of the RL approach:

It is a black box: You don’t know what the model learned or why it made a certain decision. A BCL might show a simple learned rule in text: “Anyone having trouble accessing their account should be passed on to the Customer Verification team.” But with an RL system, these learnings will be hidden in model weights.

It is not easily modified: Companies are constantly changing their processes, and AI agents will need to, too. Say you want to modify your workflow to “Anyone having trouble accessing their account should first try to reauthenticate with our new portal. If that doesn’t work, then send them to the Customer Verification team.” With a BCL, this change could be made in a few minutes in plain text, then checked with a testing/eval harness to evaluate the impact. With RL, you might need to update the environment, design a new reward function, re-run training, and then evaluate the impact. That is a long and arduous process.
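
The rule change described above can be sketched as a data edit plus an eval run. In this Python sketch the rules are structured records with the human-readable text attached; the field names (`issue`, `tried_portal`), team identifiers, and test cases are hypothetical, and a real BCL would likely have a model interpret the text instead of matching fields.

```python
RULES_V1 = [
    {
        "text": "Anyone having trouble accessing their account should be "
                "passed on to the Customer Verification team.",
        "when": {"issue": "account_access"},
        "then": "customer_verification",
    },
]

RULES_V2 = [
    {
        "text": "Anyone having trouble accessing their account should first "
                "try to reauthenticate with our new portal.",
        "when": {"issue": "account_access", "tried_portal": False},
        "then": "reauth_portal",
    },
    {
        "text": "If that doesn't work, send them to the Customer Verification team.",
        "when": {"issue": "account_access", "tried_portal": True},
        "then": "customer_verification",
    },
]


def decide(rules, ticket, default="general_support"):
    """Return the action of the first rule whose conditions all match."""
    for rule in rules:
        if all(ticket.get(key) == value for key, value in rule["when"].items()):
            return rule["then"]
    return default


def evaluate(rules, cases):
    """Fraction of labeled cases where the rules pick the expected action."""
    return sum(decide(rules, ticket) == want for ticket, want in cases) / len(cases)


# Labeled cases reflecting the new desired behavior.
CASES = [
    ({"issue": "account_access", "tried_portal": False}, "reauth_portal"),
    ({"issue": "account_access", "tried_portal": True}, "customer_verification"),
    ({"issue": "billing", "tried_portal": False}, "general_support"),
    ({"issue": "account_access", "tried_portal": False}, "reauth_portal"),
]

score_before = evaluate(RULES_V1, CASES)
score_after = evaluate(RULES_V2, CASES)
```

The edit is a readable diff between two rule lists, and the harness shows its effect on labeled cases before anything ships. Neither property comes for free when the behavior lives in model weights.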

Our hypothesis is that RL environments will play an important role, but primarily serve large research labs. Using them, foundation models will get dramatically better at doing enterprise work generally: updating CRMs, processing tickets, writing messages, etc. Companies will then use a BCL to provide instructions on how these models should do work at their business – in a human-interpretable, easily-modifiable form.

To bring a BCL to market, you need to sell outcomes, not infrastructure. Outcomes are what drive executive urgency. And most enterprises will not be capable of building complex applications with this infrastructure, even if they, in theory, could create ROI.

The clearest value proposition for a BCL is operational process automation and augmentation. Whether an enterprise buys a workflow automation platform or tries to build it internally, the performance out of the box will likely be poor due to a lack of business context. A BCL solves this problem, without requiring teams of consultants and engineers to hardcode information in prompts. It can help automate a much larger proportion of tasks, with greater reliability and controllability.

There are additional value propositions from a BCL: visibility/management (providing leaders with insight into how the organization operates) and productivity (providing front-line workers with additional context or information to do their jobs better), but we think these are secondary to core automation.

Our key questions on the future of the BCL are about the packaging and delivery model:

Any AI automation needs organization-specific context to improve performance. Today’s ascendant platforms in customer support, ITSM, sales automation, etc., are already limited by a lack of context. Right now, they solve this primarily through forward-deployed resources, but they will likely try to productize this capability over time.

Will there be a standalone context layer, or will this just be an approach/feature of each enterprise AI app? We think businesses will benefit from a single shared context layer, versus having context siloed across many separate applications, but it remains an open question.

We think an effective context layer must be created and maintained mostly autonomously. However, building end-to-end automations could still be manual – you might need process discovery to figure out what you should automate in the first place, systems/data engineering to get a solution into production, and change management when a system is deployed.

Companies like Distyl.ai are trying to build a next-generation Palantir: selling complete, services-led solutions built on top of a central platform that can drive recurring revenue and expansion use cases. We think this approach is likely to dominate in the F100, but that there is an equally exciting opportunity to build a more scalable, product-led company for the rest of the enterprise and mid-market.

If you’re thinking about business context for AI systems, we’d love to chat! Send a note to at@theoryvc.com.