Theories

Stay up-to-date on our team's latest theses and research

This is the final post in our 4-part series on the LLM infra stack.

You can catch up on our last three posts on The Data Layer, The Model Layer, and The Deployment Layer.

At their core, all LLMs do is take sequences of text as input and generate sequences of text as output. But the most exciting applications of LLMs will see models not just generate text but act as agents that interact with the world.

An LLM personal assistant will book dinner reservations.

An LLM security analyst will fix permissions on a misconfigured cloud instance.

An LLM accountant will send invoices and close the books at the end of the month.

We have seen some early examples of LLMs taking action with plugins and general agent frameworks like AutoGPT.

LLM agents today are limited by model capabilities. If you ask them to do a multi-step task (e.g., finding your existing dinner reservation, canceling it, searching for a new restaurant, and booking that), they’ll often get lost along the way.

But this is a short-term problem. Foundation model providers are rapidly improving LLMs’ ability to interact with other software and services – e.g., generating reliable API queries and JSON outputs. Models are also being trained to perform better long-term planning and reasoning.

However, agents are also limited by a lack of robust user and third-party interfaces. People often think of API and user interfaces as separate categories, but here we see the problem as shared:

LLMs need robust interfaces and tools to interact with the world. This is what will transform them from text-generation machines into the operating system that will power the next generation of software.

Let’s dive in!

LLMs will interact with third-party applications to perform actions for a user or search for additional information to accomplish a task.

Imagine using a chatbot to plan a vacation. The application shouldn't just list a set of flight options – it should allow the user to book a seat and rent a car at the destination.

In the B2B world, a marketer might use an LLM to generate social media content. They shouldn’t have to copy and paste it into each channel – the LLM platform should do it for them automatically.

Over time, many platforms will release APIs designed specifically for bot/agent access. However, there are still major opportunities for new companies to facilitate these interactions:

Other applications might see LLMs interacting with software that doesn’t have available APIs. The LLM travel app can probably integrate directly with the Marriott API, but if you stay at a local bed and breakfast, it might need to click through their website.

These apps will need tooling for LLMs to navigate your computer or web browser, return data in a way the model can understand, and allow it to take action.

Today, we are in an era where most companies bolt LLMs onto their existing products and interfaces. But LLMs enable totally novel user experiences – what will true LLM-native applications look like?

Early LLM data analysis tools use a chat interface. What if the user wants to modify a table directly to demonstrate their desired transformation instead of describing it in text?

Early LLM email productivity tools generate drafts in the editing window. What if the LLM email client of the future has an agent that runs on autopilot, where the user just has an ‘inbox’ of exceptions and messages to review?

We are early in this world, but it’s clear LLMs will drive an explosion of new user interfaces and experiences.

User interfaces will also be a key source of user-generated data to feed into observability and product analytics platforms. Thoughtfully designed interfaces will generate the best data to improve an LLM system over time.

Many of these novel interaction patterns will be built by new LLM applications as product differentiators. However, many applications require similar front-end components and interactions. There is an opportunity for a new set of front-end infrastructure companies that are designed for LLMs.

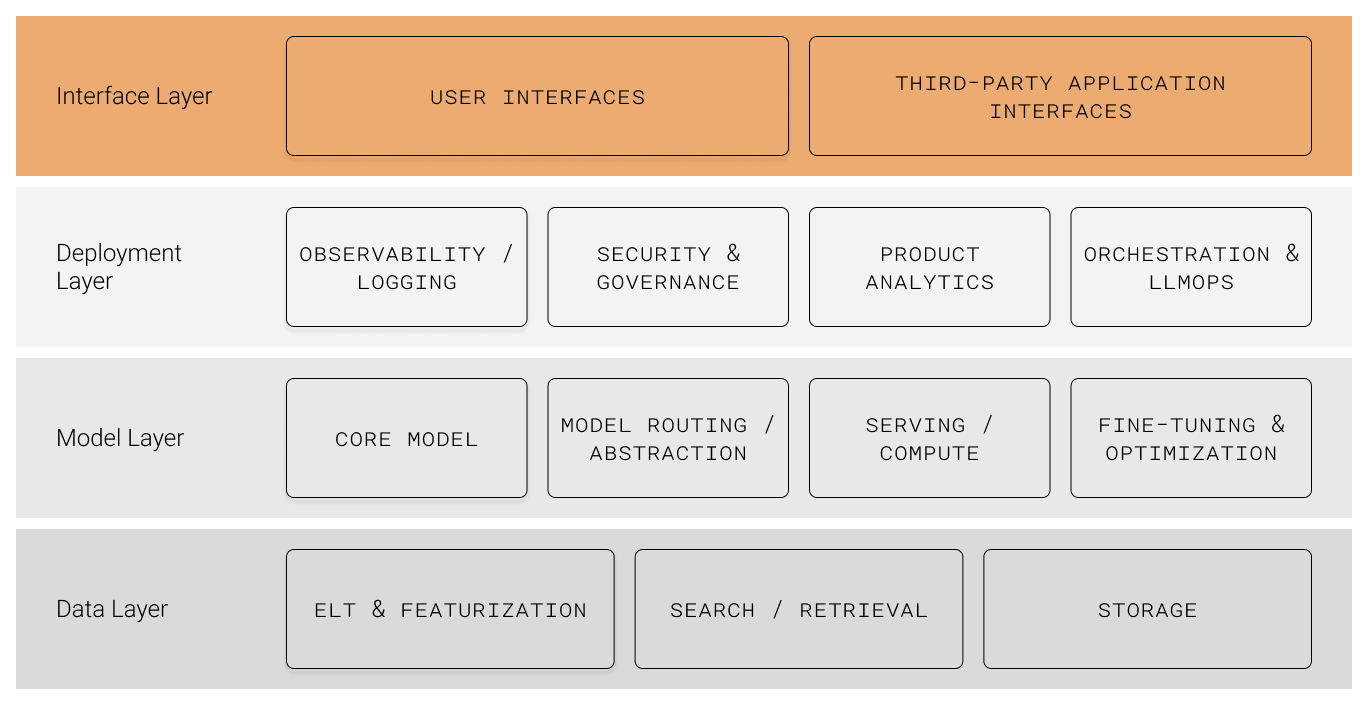

Here are the key takeaways from our LLM infrastructure research:

Practically all LLM applications need an effective Data Layer to featurize, store, and retrieve relevant information for the model to respond to a user query (while making sure it does not have access to data it shouldn’t).

Depending on the application, companies will use a mixture of self-hosted and managed service models. Many use cases will see cost and performance benefits from fine-tuning a model. For anyone not relying entirely on a foundation model-managed service, the Model Layer will require critical infrastructure to manage model routing, serving/deployment, and fine-tuning.

The unpredictable nature of LLM behaviors means post-deployment monitoring and controls are essential. The Deployment Layer will need to include security/governance instrumentation across data, model, and observability platforms. Observability/eval and product analytics tooling will enable ongoing monitoring and ad-hoc analysis. As LLM actions become more complex and multi-step, orchestration will be increasingly important.

Last, LLMs will require interfaces to interact with the world. Third-party interfaces will provide the means for LLMs to reliably navigate and perform actions in other systems. User interfaces will enable the next generation of LLM interaction patterns.

We are at the very early stages of designing this production LLM infra stack. There are over 100 early-stage startups tackling this opportunity, and we believe multiple will become multi-billion dollar companies.

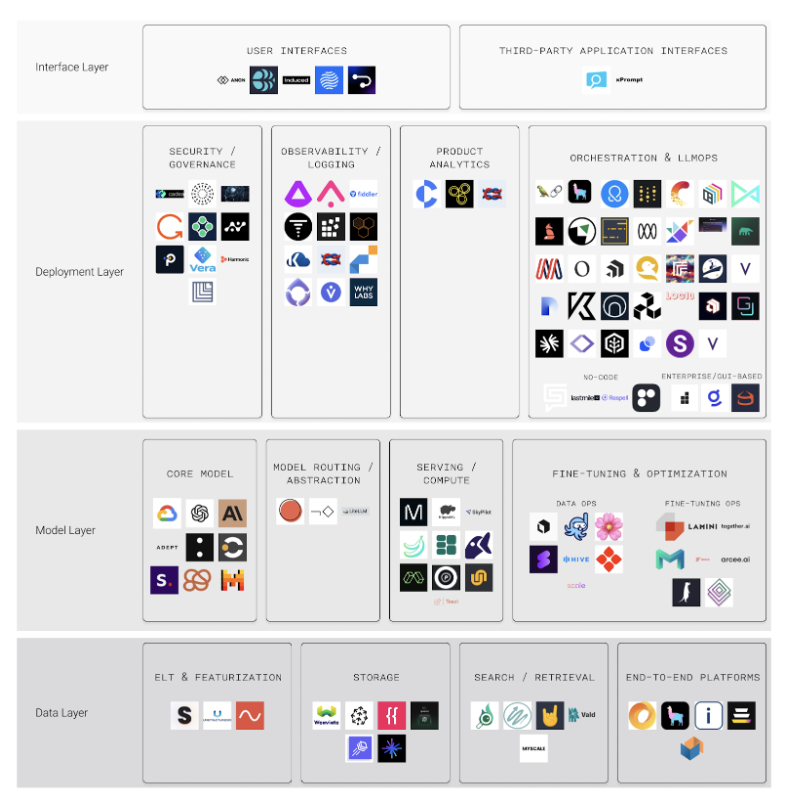

Below is a market map of the full LLM infra landscape. It’s not comprehensive, and many of these companies are hard to put into a single category. If you’re building in this space, we would love to hear from you.

Thanks to the many folks who provided input on this article series and to Ben Scharfstein, Dan Shiebler, Phil Cerles, and Tomasz Tunguz for detailed comments and editing!

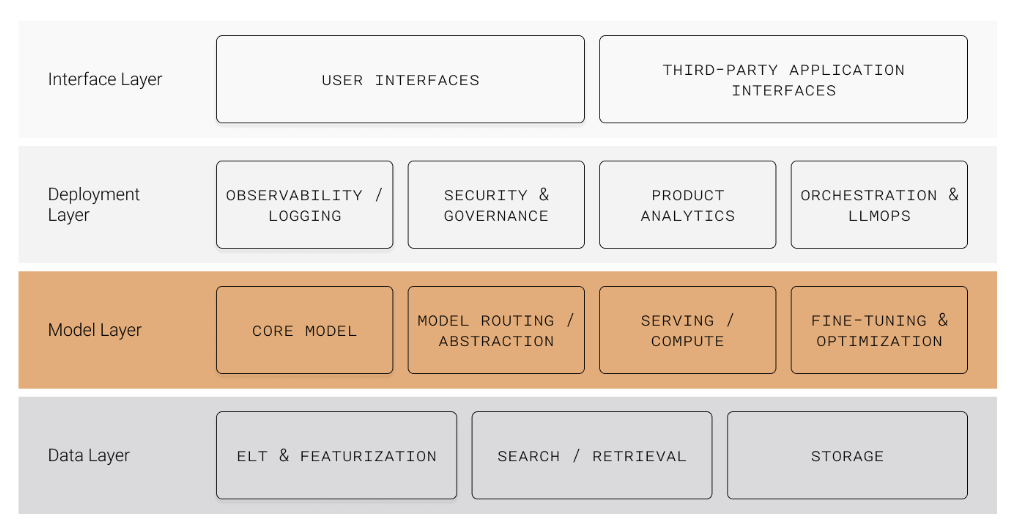

This is the third post in our 4-part series on the LLM infra stack. You can catch up on our last two posts on the Data Layer and Model Layer. If you are building in this space, we’d love to hear from you at info@theory.ventures.

LLMs allow teams to build transformative new features and applications. However, bringing LLM features to production creates a new set of challenges.

LLMs:

Product owners will feel these issues acutely: it may be easy to build a demo, but it is hard to get that feature to work reliably in production.

LLM product launches also pull in other teams. Security and compliance teams will be concerned about the risks LLMs introduce. Platform engineering teams will need to make sure the system can run performantly without exploding the cloud budget.

A new generation of infrastructure will be required to observe and manage LLM behavior. When we talk to executives who are building LLM features, this layer is often the main blocker for deployment.

The key components of the Deployment Layer include:

Security and governance

LLMs create frightening new security and safety vulnerabilities. They take untrusted text (e.g. from user inputs, documents, or websites) and effectively run it as code. They are often connected to data or other internal systems. Their behavior is unpredictable and hard to evaluate.

It’s easy to imagine a variety of ways this can go wrong.

If you’re building a chat support agent, a malicious user could ask the bot to leak details about someone else’s account.

If you’re building an LLM assistant that’s connected to email and the web, someone could make a malicious website that, when browsed, would instruct the LLM agent to send spam from your account.

Anyone seeking to harm a company's brand image could convince its LLM to write inappropriate or illegal content and post a screenshot online.

Today, there is no known way to make LLMs safe by design.

Large foundation models like GPT-4 are trained specifically to avoid bad behavior but can be tricked into breaking these rules in minutes by a non-expert. If these large models can be fooled, it’s not clear why a smaller supervisor model would catch an attack.

There is some emerging research around new architectures, like a dual-LLM system where one LLM system deals only with risky external data and the other deals only with internal systems. This work is still in its infancy.

So what is a product owner to do? The 33% of companies that say security is a top issue (Retool 2023 State of AI) can’t be paralyzed waiting for these issues to be solved. While the state of the art evolves, companies should take the traditional approach of defense in depth.

To do this, product owners can implement security and governance controls throughout the LLM stack.

At the Data Layer, they will need data access and governance controls so that LLMs can only ever access data that is appropriate to the user and context.

At the Model Layer, supervisor/firewall systems can try to identify and block things like PII leakage or obscene language. These systems will have limited effectiveness today: it is not hard to circumvent them, and model size will be limited due to latency. But it’s a place to start.

Post-deployment monitoring systems should flag anomalous or concerning behavior for review.
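As a rough illustration of defense in depth, the sketch below stacks two of the controls described above: a Data Layer filter that only passes documents the user is allowed to see, and a simple output filter that masks obvious PII. It is a minimal sketch under stated assumptions, not a production design. The `Document` type, the `allowed_groups` tag, and the two regex patterns are all hypothetical, and a regex filter is a much simpler stand-in for the supervisor models discussed above.

```python
import re
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Document:
    text: str
    allowed_groups: FrozenSet[str]  # hypothetical access-control tag


def visible_documents(docs: List[Document], user_groups: FrozenSet[str]) -> List[Document]:
    """Data Layer control: keep only documents the user may read."""
    return [d for d in docs if d.allowed_groups & user_groups]


# Deliberately simple patterns; real PII detection needs far more coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact(text: str) -> str:
    """Model Layer control: mask obvious PII before a response is returned."""
    return US_SSN.sub("[SSN]", EMAIL.sub("[EMAIL]", text))


docs = [
    Document("Refund policy: 30 days.", frozenset({"support", "public"})),
    Document("Payroll export for Q3.", frozenset({"finance"})),
]
context = visible_documents(docs, frozenset({"support"}))
print([d.text for d in context])
print(redact("Reach me at jane@example.com, SSN 123-45-6789."))
```

Neither layer is sufficient on its own: the access filter limits what the model can ever see, while the output filter catches only the patterns it was written for.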

There’s a lot to dig into in this space. We’re doing more research and expect to share a model security/governance-specific blog post in the coming months.

Observability/evaluation

LLMs show non-deterministic behavior that is sensitive to changes in model inputs as well as underlying infrastructure and data.

Today, product teams try to identify edge cases and bugs pre-launch. Most common issues are deterministic: the code for a button is broken, then it gets fixed. There are usually few enough features that they can be tested manually.

LLM systems must respond to infinite variations of input text and documents. Manually testing them all would be impossible. Teams will need evaluation tooling to measure performance programmatically. This might involve re-running historical queries or generating synthetic ones. They will need to convert unstructured outputs into qualitative and quantitative metrics. Because model outputs can change dramatically based on surrounding components, each test example will need to be traced throughout the LLM infra stack.
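To make the idea of programmatic evaluation concrete, here is a minimal sketch that re-runs a small set of historical queries and turns the unstructured answers into a pass rate. Everything in it is an assumption for illustration: `fake_model` stands in for a real LLM call, and a substring check is only one crude way to score an answer.

```python
from typing import Callable, Dict, List, Tuple


def evaluate(model: Callable[[str], str], cases: List[Tuple[str, str]]) -> Dict[str, object]:
    """Re-run each query and check that the answer contains an expected phrase."""
    failures = []
    for query, must_contain in cases:
        answer = model(query)
        if must_contain.lower() not in answer.lower():
            failures.append((query, answer))
    passed = len(cases) - len(failures)
    pass_rate = passed / len(cases) if cases else 0.0
    return {"pass_rate": pass_rate, "failures": failures}


def fake_model(query: str) -> str:
    """Hypothetical stand-in for an LLM call."""
    canned = {"What is your refund window?": "Refunds are accepted within 30 days."}
    return canned.get(query, "I'm not sure.")


# Hypothetical historical queries paired with a phrase a good answer should contain.
historical = [
    ("What is your refund window?", "30 days"),
    ("Do you ship to Canada?", "yes"),
]
report = evaluate(fake_model, historical)
print(report["pass_rate"], report["failures"])
```

The list of failures is the useful part: each failing example can be traced through retrieval, prompt, and model to find which component needs to change.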

Post-launch, today’s observability platforms monitor for performance degradation, system outages, or security breaches.

The high cost and complexity of running LLMs (described in The Model Layer) make this observability critical. On top of this, LLM observability platforms must provide real-time insights into LLM inputs and outputs. They will need to identify anomalous responses – e.g., ones that are zero-length or just a single repeating character. They can search for potential data loss or malicious use to flag to security teams. They also can evaluate responses for content and qualitative measures of correctness or desirability.
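The two anomalies named above, zero-length responses and responses made of a single repeating character, are simple enough to check directly. The function below is a minimal sketch; the name `is_anomalous` and the `min_repeat` threshold are assumptions, and a real platform would layer many more checks on top.

```python
def is_anomalous(response: str, min_repeat: int = 4) -> bool:
    """Flag responses that are empty or consist of one repeated character."""
    stripped = response.strip()
    if not stripped:
        return True  # zero-length or whitespace-only output
    # One distinct character repeated many times, e.g. "aaaaaaaa"
    return len(stripped) >= min_repeat and len(set(stripped)) == 1


for sample in ["", "   ", "aaaaaaaa", "Your order shipped today."]:
    print(repr(sample), is_anomalous(sample))
```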

These systems (along with product analytics described below) will be the foundation for a new workflow that must be created in LLM product orgs. Tracking poor responses will create an evaluation dataset of edge cases and undesired behavior.

These issues will need to go to a cross-functional team, since it will often not be clear what changes are required to fix them. A data engineer might need to fix an issue in the data retrieval system. A different engineer might need to fine-tune or switch to a different model. A product or marketing person might need to change the natural language prompt instructions.

These workflows don’t yet exist, but we think they will be a core competency for companies building the future generation of LLM products.

Traditional ML observability platforms serve some of these functions today. However, there are unique characteristics of LLMs:

We believe these will be best served by a purpose-built platform.

Product analytics

Product analytics for LLM systems look different from the analyses product teams conduct today.

Today’s product analytics are designed to track a series of discrete user actions. On a traditional customer support page, a user might open section A and then click on subheader B.

LLM applications must track very different usage patterns. For a customer support chatbot, every user action might be the same: a sent message. How should a PM analyze which help sections the users are asking about? How can they evaluate the quality of the chatbot’s responses?

LLM applications are also built with complex systems. Let’s say the chatbot’s answer didn’t satisfy the user. Did it not understand the question? Did the data retrieval system not find the right document for the LLM to refer to? Or did the model just make a reasoning error in its generation?

LLM analytics platforms will need to be built for this new paradigm. They will use intelligent systems (often language models themselves) to categorize and quantify unstructured data. They will also provide interfaces and workflows to evaluate individual examples and trace issues through the system.

LLM product analytics platforms share many core capabilities with LLM observability/evaluation tools. Differences in user types and workflows (e.g., platform engineers monitoring latency vs. product managers analyzing user flows) might require separate platforms. But it’s possible these two categories converge.

Orchestration & LLMOps

LLM applications will often need to coordinate multiple processes to complete a task.

Let’s say I am building an LLM-based travel agent. To plan an activity, it might need to:

Simple implementations can be built in the application code itself. More complex systems will require purpose-built platforms to orchestrate all of these actions and maintain memory/state throughout.

Because these platforms act as a control plane, they have a natural opportunity to expand control to more components of the LLM infra stack. We call these companies “LLMOps” broadly. There are about 40 of them today.

LLMOps companies include a variety of features across the LLM infra stack. Some are focused on production inference, while others also provide tooling for fine-tuning. There are sub-categories of LLMOps companies focused on developer tools vs. low/no-code business applications.

Some companies will choose LLMOps platforms for convenience. With limited team capacity, they may be willing to pay extra or give up flexibility for an end-to-end solution.

It’s also possible that end-to-end LLMOps platforms can be more effective than standalone components. For example, they might integrate an observability platform with data storage and model training infrastructure to facilitate regular fine-tuning. They also might be easiest to instrument with security or governance controls.

In the modern data stack, we have seen best-of-breed modular components emerge. We expect that for most companies building LLM applications, the same will be true.

If you are building in this space, we’d love to hear from you at info@theory.ventures. In our next post, we’ll explore the Interface Layer.

This is the second post in our 4-part series on the LLM infra stack. You can catch up on our last post on the Data Layer here. If you are building in this space, we’d love to hear from you at info@theory.ventures.

The core component of any LLM system is the model itself. It provides the fundamental capabilities that enable new products.

LLMs are foundation models: models trained on a broad set of data that can be adapted to a wide range of tasks. They tend to fall into two categories: large, hosted, closed-source models like GPT-4 and PaLM 2 or smaller, open-source models like Llama 2 and Falcon.

It’s unclear which models will dominate. The state of the art is changing rapidly – a new best-in-class model is announced monthly. Too few products have been built with LLMs so far to test the trade-offs. There are two emerging paths:

1. Large foundation models dominate: Closed-source LLMs continue to provide capabilities other models can’t match. A managed service offers simplicity & rapid cost reduction. These vendors solve security concerns.

2. Fine-tuned models provide the best value: Smaller, less-expensive models prove just as good for most applications after fine-tuning. Businesses prefer them because they control the model and can create intellectual property.

Large foundation models will work best for use cases that require broad knowledge and reasoning. Asking a model to plan a month-long vacation itinerary in Southeast Asia for a vegetarian couple interested in Buddhism requires planning and lots of working memory. These models are also best for new tasks where you don’t have data.

Smaller, fine-tuned models will excel in products composed of repeatable, well-defined tasks. They’ll perform just as well as larger models for a fraction of the cost. Need to categorize customer feedback as positive or negative? Extract details from an email into a spreadsheet? A smaller model will work great.

Which models are safer and more secure? Which are best for compliance and governance? It’s still unclear.

Many companies would prefer to self-host their models to avoid sending their data to a third party. However, emerging research suggests fine-tuning may compromise safety. Foundation models are tuned to avoid inappropriate language and content. LLMs, especially smaller ones, can forget these rules when fine-tuned. Large model providers may also find it easier to demonstrate that their models and data are compliant with nascent regulations. More work needs to be done to establish these conclusions.

In the long term, we can’t predict how LLMs will develop. A large research org could discover a new type of model that is an order of magnitude better than what we have today.

Progress in model development will drastically change the structure of the Model Layer. Regardless of the direction, it will have the following key components:

Core model:

Training LLMs from scratch (also known as pre-training) costs hundreds of thousands to tens of millions of dollars for each model. OpenAI is said to have spent over $100 million on GPT-4. Only companies with huge balance sheets can afford to develop them.

Conveniently, fine-tuning a pre-trained model with your own data can provide similar results at a fraction of the cost. This can cost well under $100 with data you already have on hand. During inference, fine-tuned models can be faster and an order of magnitude cheaper to boot.

Fine-tuning is supported by a rapidly improving set of open-source models. The most capable ones, like Meta’s Llama 2, perform similarly to the best LLMs from 6-12 months ago (e.g., GPT-3.5). When fine-tuned for a concrete task, open-source models often reach near-parity with today’s best closed-source LLMs (e.g., GPT-4).

Serving/compute:

Training LLMs is expensive, but inference isn’t cheap, either. LLMs require an extreme amount of memory and substantial compute. For a product owner experimenting on OpenAI, each GPT-3.5 query will cost $0.01-0.03. GPT-4 can be up to two orders of magnitude more expensive, at up to $3.00 per query.

If you try to self-host for privacy/security or cost reasons, you won’t find it easy to match OpenAI. Virtual machines with NVIDIA A100 GPUs (the best one for most large foundation models) run $3.67 to $40.55 per hour on Google Cloud. Availability of these machines is very limited and sporadic, even through major cloud providers.

For LLM applications with thousands or millions of users, product owners will face new challenges. Unlike most classic SaaS applications, they’ll need to think about costs. At $3 per query, it’s hard to build a viable product. Similarly, users won’t hang around long if it takes 30 seconds to return an answer.
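A quick back-of-the-envelope calculation shows why per-query cost matters at scale. The per-query prices below come from the figures above; the user count and queries per user are hypothetical numbers chosen only for illustration.

```python
def monthly_inference_cost(users: int, queries_per_user: int, cost_per_query: float) -> float:
    """Total monthly spend if every query is billed at a flat per-query price."""
    return users * queries_per_user * cost_per_query


# Hypothetical usage: 10,000 users sending 20 queries each per month.
users, queries_per_user = 10_000, 20
for label, price in [("$3.00 per query", 3.00), ("$0.03 per query", 0.03)]:
    cost = monthly_inference_cost(users, queries_per_user, price)
    print(f"{label}: ${cost:,.0f} per month")
```

Under these assumptions the same traffic costs $600,000 per month at the high end and $6,000 at the low end, which is the gap a cheaper model or better serving stack has to close.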

It will be critical to serve LLMs in a performant and cost-effective way. Even “smaller” models described above still have billions of parameters.

How can you reduce cost and latency in production? There are lots of approaches. On the inference side, batch queries or cache their results. Optimize memory allocation to reduce fragmentation. Use speculative decoding (when a smaller model suggests tokens and a large model “accepts” them). Infrastructure usage can be optimized by comparing GPU prices in real-time and sending workloads to the cheapest option. Use spot pricing if you can manage spotty availability and failovers.
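Two of these ideas, caching results and sending work to the cheapest available hardware, can be sketched in a few lines. This is an illustration under stated assumptions rather than a recommended design: `CachedModel`, `cheapest_provider`, and the provider names are hypothetical, and normalizing prompts by case and whitespace is only safe when those differences do not change the desired answer.

```python
from typing import Callable, Dict


class CachedModel:
    """Wrap a model call so repeated prompts are served from memory."""

    def __init__(self, generate: Callable[[str], str]) -> None:
        self._generate = generate
        self._cache: Dict[str, str] = {}
        self.model_calls = 0  # counts how often the underlying model ran

    def __call__(self, prompt: str) -> str:
        # Assumption: prompts differing only in case/whitespace are equivalent.
        key = " ".join(prompt.lower().split())
        if key not in self._cache:
            self.model_calls += 1
            self._cache[key] = self._generate(prompt)
        return self._cache[key]


def cheapest_provider(prices_per_hour: Dict[str, float]) -> str:
    """Pick the provider with the lowest current hourly GPU price."""
    return min(prices_per_hour, key=prices_per_hour.get)


model = CachedModel(lambda prompt: f"answer to: {prompt}")  # stand-in for an LLM
model("What are your opening hours?")
model("what are your  opening hours?")  # served from the cache
print(model.model_calls)

# Hypothetical provider names with sample hourly prices.
print(cheapest_provider({"provider-a": 40.55, "provider-b": 3.67}))
```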

Most companies won’t have the in-house expertise to do all of this themselves. Startups will rise to fill this need.

Model routing/abstraction:

For some LLM applications, it will be obvious which model to use where. Summarize emails with one LLM. Transform the data to a spreadsheet with another.

But for others, it can be unclear. You might have a chat interface where some customer messages are simple, and others are complex. How will you know which should go to a large model vs. a small one?

For these types of applications, there may be an abstraction layer to analyze each request and route it to the best model.

As a product owner, it is already difficult to evaluate the behavior of an LLM in production. If your system might route to many different LLMs, that problem could get even more challenging.

However, if dynamic routing improves capabilities and performance, the trade-off might be worth it. This layer could almost be thought of as a separate model itself and evaluated on its own merit.
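A routing layer of this kind could start as a simple heuristic and later be replaced by a learned classifier. The sketch below sends long messages, or messages containing words that hint at reasoning, to a large model and everything else to a small one. The model names, the keyword list, and the word-count threshold are all assumptions for illustration.

```python
from typing import Tuple

# Hypothetical hint words suggesting the request needs multi-step reasoning.
COMPLEX_HINTS: Tuple[str, ...] = ("why", "compare", "plan", "explain")


def route(message: str, max_simple_words: int = 20) -> str:
    """Return the name of the model that should handle this message."""
    words = [w.strip("?.,!") for w in message.lower().split()]
    if len(words) > max_simple_words:
        return "large-model"
    if any(w in COMPLEX_HINTS for w in words):
        return "large-model"
    return "small-model"


print(route("Where is my order?"))
print(route("Compare the two plans and explain which is cheaper"))
```

Because `route` is an ordinary function, it can be evaluated on its own: log its decisions alongside response quality and check how often the small model was chosen for a request it could not handle.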

Fine-tuning & optimization:

As we wrote above, many product owners will fine-tune a model for their use case.

For most use cases, fine-tuning will not be a one-off endeavor. It will start in product development and continue indefinitely as the product is used.

The key to great fine-tuning will be fast feedback loops. Today, product owners triage user feedback/bugs in code and ask engineers to fix them. For LLM applications, product owners will monitor qualitative and quantitative data on LLM behavior. They will work with engineers, data ops teams, product/marketing, and evaluators to curate new data and re-fine-tune models.

We’ll discuss post-deployment monitoring more in our next post on the Deployment Layer.

To fine-tune a model, there are two major components. There is an important cohort of LLM infrastructure companies serving each of them.

If you are building in this space, we’d love to hear from you at info@theory.ventures! In our next post, we’ll explore the Deployment Layer.